Top Prompt Management Tools

Summary

Effective prompt management enhances model performance, efficiency, and ease of customization for various applications. Prompt management tools help developers and organizations optimize AI workflows so that AI models generate accurate and relevant outputs. This article covers Zenbase, Helicone, Agenta, Hamming, Pezzo, and Langfuse as prompt management tools.

Key insights:

Efficiency and Consistency: Prompt management tools automate the creation and optimization of prompts, enhancing productivity and ensuring consistent performance across applications.

Scalability and Collaboration: These tools support scalability in managing numerous prompts and models, and facilitate collaboration among team members, enhancing overall project management.

Customization: They offer customization options to tailor prompts to specific project needs, improving the adaptability and effectiveness of AI models.

Zenbase: Focuses on automating prompt engineering, streamlining AI workflows and reducing the need for manual input, which significantly enhances the efficiency of developing AI-driven applications.

Helicone: Provides comprehensive tools for observability and debugging of LLMs, helping developers track requests, analyze performance, and fine-tune AI models to ensure optimal operation and cost-efficiency.

Agenta: Designed for developing and deploying LLM applications, Agenta facilitates collaborative prompt engineering and integrates tools for automated evaluation and human feedback, making it ideal for team-based AI projects.

Hamming: Specializes in prompt optimization and robust evaluation, offering features like prompt CMS, prompt playground, and active monitoring to help teams develop reliable AI applications with minimal oversight.

Pezzo: Enables quick deployment of AI features by managing prompts efficiently, providing observability tools, and optimizing costs and performance for fast-paced development environments.

Langfuse: Supports comprehensive prompt management with observability tools that track performance in real time, and provides compliance features that make it suitable for organizations needing stringent security and data protection.

Introduction

With the rise of Artificial Intelligence (AI) tools in recent years, prompt management tools have become invaluable for optimizing AI workflows and enhancing model performance. These tools facilitate the creation, management, and optimization of prompts, which are crucial for guiding AI models to produce accurate and relevant outputs.

This article aims to provide a comprehensive comparison of top AI prompt management tools. By examining their features, strengths, and ideal use cases, developers and organizations can make an informed decision that aligns with their specific needs.

Prompt Management

1. Definitions and Importance

Prompts are essential in optimizing model outputs, serving as the primary interface between the AI and the user. For example, in the context of Large Language Models (LLMs), even slight variations in prompt wording can lead to drastically different outputs despite conveying the same underlying meaning. By providing clear and precise instructions, prompts help shape the behavior of AI models, ensuring they produce outputs that meet specific user requirements. Effective prompt management not only enhances the performance and reliability of AI systems but also makes them more efficient and easier to fine-tune for diverse applications.

Prompt management tools are specialized software solutions designed to streamline the creation, management, and optimization of prompts that guide AI models in generating accurate and relevant outputs. These tools often include automated prompt engineering, which uses algorithms and an evaluator to generate prompts that improve the quality and relevance of the AI's output.
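To make the idea of automated prompt engineering with an evaluator concrete, here is a minimal sketch in Python. It is not how any of the tools in this article work internally. The function names, the candidate prompts, and the `fake_model` stand-in for an LLM call are all assumptions made for illustration. The evaluator here is a simple exact-match score over a few labeled examples.

```python
from typing import Callable, Iterable


def pick_best_prompt(
    candidates: Iterable[str],
    run_model: Callable[[str, str], str],
    examples: list[tuple[str, str]],
) -> tuple[str, float]:
    """Score each candidate prompt on labeled examples and return the best one."""
    best_prompt, best_score = "", -1.0
    for prompt in candidates:
        # Evaluator: fraction of examples where the model output matches exactly.
        hits = sum(
            run_model(prompt, question).strip() == expected
            for question, expected in examples
        )
        score = hits / len(examples)
        if score > best_score:
            best_prompt, best_score = prompt, score
    return best_prompt, best_score


def fake_model(prompt: str, question: str) -> str:
    """Hypothetical stand-in for an LLM: it answers tersely only when asked to."""
    a, b = map(int, question.split("+"))
    if "only the number" in prompt:
        return str(a + b)
    return f"The answer is {a + b}."


EXAMPLES = [("2+2", "4"), ("10+5", "15")]
CANDIDATES = [
    "Add the numbers.",
    "Add the numbers. Reply with only the number.",
]

best, score = pick_best_prompt(CANDIDATES, fake_model, EXAMPLES)
print(best, score)
```

In a real workflow the candidates would typically be generated rather than hand-written, and the evaluator would be whatever metric matters for the application.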

The importance of prompt management tools lies in their ability to enhance the productivity of developers, reduce the manual effort involved in crafting and refining prompts, and improve the overall performance of AI models. By automating various aspects of prompt management, these tools help developers focus on higher-level tasks, such as model fine-tuning and deployment.

2. General Benefits

Efficiency: Automating the prompt creation and optimization processes saves time and reduces the workload on developers, allowing for faster iterations and deployment of AI models.

Consistency: Standardizing prompts through these tools ensures consistent quality and performance across different AI applications.

Scalability: Prompt management tools facilitate the handling of many prompts and models, supporting the scalability of AI projects.

Collaboration: Many of these tools offer features that support team collaboration, enabling multiple stakeholders to contribute to and refine prompts effectively.

Customization: These tools provide the flexibility to customize prompts according to specific project requirements, enhancing the adaptability and effectiveness of AI models.

Prompt Management Tools

1. Zenbase

Zenbase is a tool that automates prompt engineering, streamlining AI workflows by reducing the need for manual prompt crafting. It offers an open-source SDK, allowing developers to integrate automated prompt engineering into their projects.

This automation helps create, refine, and optimize prompts, saving time and improving efficiency. Zenbase's primary features include continuous quality optimization and seamless integration into various AI workflows, making it a good fit for developers who want to improve user experience quickly.

Zenbase is currently free to use.

2. Helicone

Helicone is an open-source observability platform designed for logging, monitoring, and debugging LLMs. It provides comprehensive tools for tracking requests, analyzing performance, and running experiments on prompts. Key features include:

Labels and Feedback: Easily segment requests, environments, and more with custom properties to better understand and optimize AI model performance.

Caching: Lower costs and improve performance by configuring cache responses, ensuring faster access to frequently used data.

User Rate Limiting: Implement rate limits on power users by requests, costs, and other metrics to prevent abuse and ensure fair usage.

Alerts: Get notified when your application experiences downtime, slow performance, or other issues, allowing for quick resolution and maintaining reliability.

Key Vault: Securely map and manage your API keys, tokens, and other sensitive information, enhancing the security of your AI operations.

Exporting: Extract, transform, and load your data using Helicone's REST API, webhooks, and more, facilitating easy data management and integration with other tools.

Performance: Benefit from sub-millisecond latency impact, 100% log coverage, and industry-leading query times, ensuring that your AI models run smoothly and efficiently.

Prompt Experiments: Test prompt changes and analyze results with ease. Helicone allows for easy versioning of prompts and comparison of results across datasets, aiding in prompt optimization without affecting production data.

Customer Portal: Share Helicone dashboards with your team and clients. Manage permissions, rate limits, and access, and customize branding and the overall look and feel.

Fine-tuning: Collect feedback and improve model performance over time. Helicone simplifies the process of fine-tuning OpenAI models, collecting and labeling data, and exporting data as CSV or JSONL for further analysis.

These features make Helicone a versatile tool for managing and optimizing the performance of large language models in various AI applications.
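The caching feature listed above rests on a general technique: key each request by its content and reuse the stored response until it expires. The sketch below illustrates that technique only. It does not use Helicone's API or reflect its implementation, and `ResponseCache`, `fake_llm`, and the 60-second lifetime are hypothetical names and values chosen for the example.

```python
import hashlib
import json
import time
from typing import Callable


class ResponseCache:
    """In-memory response cache keyed by a hash of the model name and prompt."""

    def __init__(self, ttl_seconds: float) -> None:
        self.ttl = ttl_seconds
        self.store: dict[str, tuple[float, str]] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(model: str, prompt: str) -> str:
        # Serialize deterministically so identical requests share a key.
        payload = json.dumps({"model": model, "prompt": prompt}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(
        self, model: str, prompt: str, call: Callable[[str, str], str]
    ) -> str:
        cache_key = self.key(model, prompt)
        now = time.monotonic()
        entry = self.store.get(cache_key)
        if entry is not None and now - entry[0] < self.ttl:
            self.hits += 1
            return entry[1]
        # Miss or expired entry: call the model and remember the response.
        self.misses += 1
        response = call(model, prompt)
        self.store[cache_key] = (now, response)
        return response


calls: list[str] = []


def fake_llm(model: str, prompt: str) -> str:
    """Hypothetical stand-in for a paid LLM request."""
    calls.append(prompt)
    return prompt.upper()


cache = ResponseCache(ttl_seconds=60)
first = cache.get_or_call("demo-model", "hello", fake_llm)
second = cache.get_or_call("demo-model", "hello", fake_llm)
print(first, second, len(calls))
```

The second request is served from the cache, so the underlying model is called only once. That is where the cost and latency savings come from.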

Helicone is free to use for up to 100,000 requests per month. Beyond this limit, it offers a pay-as-you-go plan. Organizations can also contact Helicone about an enterprise plan that is SOC 2 compliant and allows for on-premise deployment.

3. Agenta

Agenta is an open-source platform aimed at developing, managing, and deploying LLM applications. It offers tools for prompt management, evaluation, human feedback, and observability. Key features include:

Collaborative Prompt Engineering: Enables teamwork in prompt creation and comparison across different LLM providers.

Automated Evaluation Framework: Automatically compares LLM outputs with ground truth data for quality assurance.

Human Feedback Interface: Facilitates A/B testing and quality assessment through human feedback.

Observability Framework: Tracks costs, quality, and response times of LLM applications, ensuring continuous optimization.

Agenta is a developer-first platform that emphasizes streamlining the workflow when building apps powered by LLMs. Its features make it an ideal choice for teams that are looking to quickly build LLM applications.
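As an illustration of what comparing LLM outputs with ground truth can look like in its simplest form, the sketch below computes exact-match accuracy after light normalization. It is a generic example, not Agenta's evaluation framework. The function names and sample data are assumptions.

```python
def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def exact_match_accuracy(outputs: list[str], ground_truth: list[str]) -> float:
    """Fraction of outputs that match the expected answer after normalization."""
    if len(outputs) != len(ground_truth):
        raise ValueError("outputs and ground_truth must have the same length")
    if not outputs:
        return 0.0
    matches = sum(
        normalize(out) == normalize(expected)
        for out, expected in zip(outputs, ground_truth)
    )
    return matches / len(outputs)


# Hypothetical model outputs and their expected answers.
outputs = ["Paris", "  berlin ", "Rome"]
truth = ["paris", "Berlin", "Madrid"]

accuracy = exact_match_accuracy(outputs, truth)
print(f"{accuracy:.2f}")
```

Exact match suits short factual answers. Free-form outputs usually need a softer metric or a human reviewer, which is where a human feedback interface comes in.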

Agenta offers a free plan that allows for 1 application, 1 seat, and 20 evaluations per month. Teams and enterprises can contact them for a customized plan.

4. Hamming

Hamming is a platform designed to streamline the development and deployment of reliable AI applications, focusing on prompt optimization, active monitoring, and robust evaluation. Here are its key features:

Prompt CMS: Store and version prompts, allowing for easy deployment of prompt changes without needing to modify code. This feature is crucial for maintaining consistency and tracking changes in prompts over time.

Prompt Playground: Test new prompt variants and run evaluations against them using datasets. This sandbox environment helps developers iterate quickly and identify the most effective prompts for their AI models.

Prompt Optimizer: Automatically generates the best prompts for specific tasks, significantly reducing the manual effort required in prompt engineering. This tool helps enhance the performance and reliability of AI models.

Evaluation and Active Monitoring: Hamming provides tools to test AI pipelines on curated datasets, measuring accuracy, tone, hallucinations, precision, and recall. It goes beyond passive monitoring by actively tracking how users interact with AI applications in production, flagging cases that need attention.

Security: Uses Hamming's adversarial datasets to test robustness against prompt-injection attacks.

Support for RAG & AI Agents: The platform offers optimized scores for retrieval-augmented generation (RAG) systems, making it easier to identify and fix bottlenecks. It also simplifies stress-testing for function calls in AI agents.

Collaboration and Experimentation: Hamming supports easy annotation of examples, sharing of experiment results, and production traces. It includes features for manual overrides, tracking hypotheses, proposed changes, and learnings from each experiment.

By offering a comprehensive set of tools for prompt optimization, monitoring, and dataset management, Hamming aims to help product and engineering teams build self-improving AI systems with minimal human oversight, ensuring reliable and high-quality AI outputs.
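The Prompt CMS idea described above, storing and versioning prompts so that a change can be deployed without touching application code, can be sketched in a few lines. This is an illustrative in-memory model under assumed names (`PromptStore`, `save`, `deploy`, `render`), not Hamming's API.

```python
class PromptStore:
    """Keeps every saved version of a prompt and tracks which one is live."""

    def __init__(self) -> None:
        self._versions: dict[str, list[str]] = {}
        self._live: dict[str, int] = {}

    def save(self, name: str, template: str) -> int:
        """Append a new version and return its 1-based version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(template)
        return len(versions)

    def deploy(self, name: str, version: int) -> None:
        """Mark an existing version as the one served to the application."""
        if not 1 <= version <= len(self._versions.get(name, [])):
            raise KeyError(f"{name} has no version {version}")
        self._live[name] = version

    def render(self, name: str, **variables: str) -> str:
        """Fill the live template; application code never names a version."""
        template = self._versions[name][self._live[name] - 1]
        return template.format(**variables)


store = PromptStore()
v1 = store.save("greet", "Say hello to {user}.")
v2 = store.save("greet", "Greet {user} warmly in one sentence.")

store.deploy("greet", v1)
before = store.render("greet", user="Ada")

store.deploy("greet", v2)  # prompt change ships without a code change
after = store.render("greet", user="Ada")
print(before, "|", after)
```

Because the application only calls `render("greet", ...)`, switching or rolling back a prompt is a data change rather than a code deployment.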

Hamming’s pricing information is not publicly available on their website. However, interested individuals can book a demo on their website.

5. Pezzo

Pezzo is an open-source AI platform that allows developers to build, test, monitor, and instantly ship AI features. Key features include:

Prompt Management: Manage prompts in one place, utilize version control, and deploy to production instantly.

Observability: Understand exactly what happened, when, and where, optimizing spending, speed, and quality.

Troubleshooting: Inspect prompt executions in real-time, reducing debugging time.

Collaboration: Enables teams to collaborate and deliver impactful AI features in sync.

Cost and Performance Optimization: Continuously optimize AI operations for cost and performance, ensuring efficient resource usage.

Quick Deployment: Ship AI-powered features rapidly, significantly accelerating development cycles.
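The observability and cost optimization items above come down to recording, for every prompt execution, when it ran, how long it took, and what it cost. The following sketch shows one way to capture that data. It is not Pezzo's client library. `ExecutionLog`, the token counts, and the price of 0.50 USD per 1,000 tokens are hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Execution:
    prompt_name: str
    started_at: float  # wall-clock timestamp of the call
    latency_s: float   # how long the call took
    tokens: int
    cost_usd: float


class ExecutionLog:
    """Wraps model calls and records latency and estimated cost for each."""

    def __init__(self, usd_per_1k_tokens: float) -> None:
        self.rate = usd_per_1k_tokens
        self.records: list[Execution] = []

    def track(self, prompt_name: str, call: Callable[[], tuple[str, int]]) -> str:
        started_at = time.time()
        t0 = time.perf_counter()
        text, tokens = call()  # the call returns (response text, tokens used)
        latency = time.perf_counter() - t0
        cost = tokens / 1000 * self.rate
        self.records.append(
            Execution(prompt_name, started_at, latency, tokens, cost)
        )
        return text

    def total_cost(self, prompt_name: str) -> float:
        return sum(r.cost_usd for r in self.records if r.prompt_name == prompt_name)


log = ExecutionLog(usd_per_1k_tokens=0.5)  # assumed price, for illustration only
log.track("summarize", lambda: ("short summary", 2000))
log.track("summarize", lambda: ("another summary", 1000))
log.track("classify", lambda: ("positive", 200))

summarize_cost = log.total_cost("summarize")
print(f"{summarize_cost:.2f}")
```

With records like these, spending and latency can be broken down per prompt, which is the starting point for deciding what to optimize.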

6. Langfuse

Langfuse is an open-source LLM Engineering platform. It offers a set of tools for managing, versioning, and deploying prompts. Key features include:

Prompt Management: Manage and version prompts to ensure consistency and track changes over time.

Evaluation and Metrics: Evaluate the performance and quality of LLM outputs using user feedback, model-based evaluations, and manual scoring.

Experimentation and Testing: Run experiments and track application behavior before deploying new versions to prevent regressions.

Observability: Provides tools to track and monitor the performance of prompts and applications in real time, ensuring efficient debugging and optimization.

Collaboration: Supports collaborative prompt management, allowing teams to work together effectively on prompt development and optimization.

Compliance: Langfuse Cloud is SOC 2 Type II and ISO 27001 certified and GDPR compliant.
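The experimentation item above mentions checking new versions before deployment to prevent regressions. A minimal sketch of such a gate is shown below. It is a generic illustration, not part of the Langfuse SDK. The function name, the scores, and the 0.02 tolerance are assumptions.

```python
from statistics import mean


def passes_regression_check(
    baseline_scores: list[float],
    candidate_scores: list[float],
    max_drop: float = 0.02,
) -> bool:
    """Allow deployment only if the mean score drops by at most max_drop."""
    return mean(candidate_scores) >= mean(baseline_scores) - max_drop


# Hypothetical evaluation scores (0 to 1) from the same test dataset.
baseline = [0.8, 0.9, 1.0]
improved = [0.9, 0.9, 0.95]
degraded = [0.6, 0.9, 0.9]

ok_to_ship = passes_regression_check(baseline, improved)
blocked = not passes_regression_check(baseline, degraded)
print(ok_to_ship, blocked)
```

The scores could come from any of the evaluation methods listed above, such as user feedback, model-based evaluations, or manual scoring.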

Langfuse offers a free plan for up to 50,000 observations per month, a Pro plan at $59 per month for 100,000 observations with unlimited users and dedicated support, and a Team plan starting at $499 per month with unlimited ingestion throughput and additional security and compliance features.

These tools collectively offer a wide range of features and capabilities, catering to different aspects of AI prompt management and optimization. Whether it's automating prompt engineering, running detailed experiments, or managing AI model performance, each tool brings unique strengths to the table, enabling developers to choose the best solutions for their specific needs.

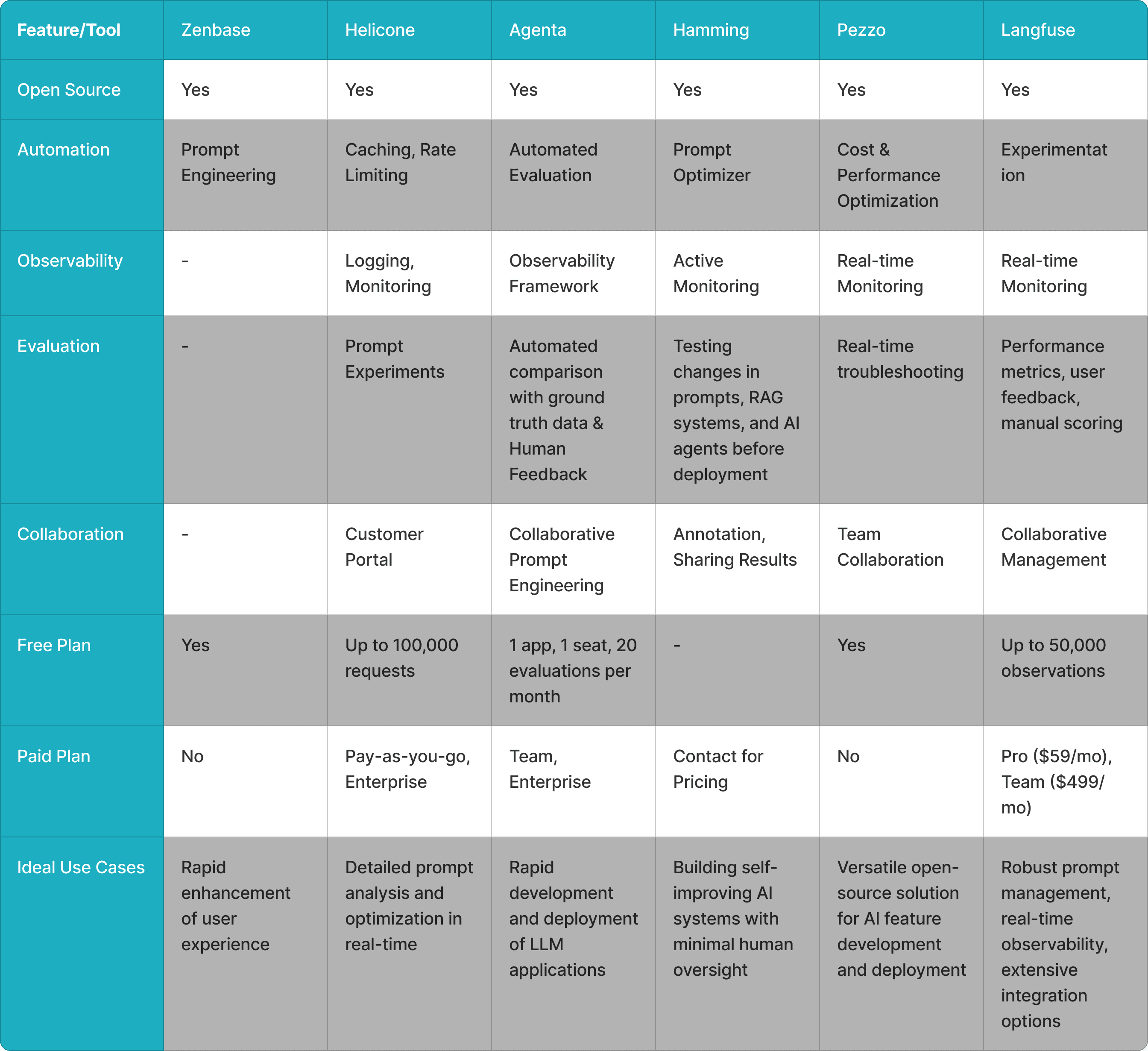

Comparative Analysis

To understand which AI prompt management tool might be best suited for your needs, we will compare the features of Zenbase, Helicone, Agenta, Hamming, Pezzo, and Langfuse. Each of these tools offers unique capabilities tailored to different aspects of AI prompt management and optimization.

Conclusion

In conclusion, selecting the appropriate tool depends on the specific requirements of your project or organization. By carefully considering the features and ideal use cases outlined in this comparison, you can make an informed decision that aligns with your goals and optimizes your workflow. Each tool brings unique capabilities to the table, and understanding these will help you leverage the right solution for your needs.

Unlock the Power of AI with Walturn

Are you looking to enhance your AI model's performance and streamline your development process? Walturn offers expert guidance and tailored solutions for integrating top AI prompt management tools into your workflow. Whether you need to automate prompt engineering, optimize model outputs, or improve collaboration within your team, we have the expertise to help you succeed. Partner with Walturn to make the most of AI advancements and drive innovation in your organization.
