Secure and Compliant Generative AI for the Public Sector

Summary

This article explores generative AI's potential in the public sector, in applications like document processing, fraud detection, and policy support. It highlights the importance of compliance, security, and data privacy to protect sensitive citizen data. Regulatory frameworks such as FedRAMP, HIPAA, and AI ethics guidelines are key to responsible AI use in government. It also discusses the UAE government’s AI vision and regulatory efforts.

Key insights:

Transformative Potential of Generative AI: Generative AI holds the potential to revolutionize government operations by automating tasks, improving efficiency, and enhancing citizen services. AI can optimize processes like fraud detection, policy support, and public service delivery.

Key Use Cases of Generative AI in Government Agencies: Governments are using generative AI for automating citizen interactions, speeding up document processing, detecting fraud, and supporting policy decisions through advanced data analysis.

Ensuring Compliance with Regulations: Governments must adhere to statutory requirements such as HIPAA and FISMA, and follow guidance such as the White House Blueprint for an AI Bill of Rights, to ensure that AI systems are secure, ethical, and transparent in handling sensitive citizen data.

The UAE Case Study: The UAE is leading in AI deployment through its 2031 AI Strategy, focusing on enhancing government services, boosting national competitiveness, and creating strong governance frameworks to ensure responsible AI use.

Ethical and Bias Considerations: Addressing AI biases is crucial, particularly in sensitive areas like criminal justice. AI systems must be designed to prevent reinforcing societal prejudices and ensure equitable and fair outcomes.

Best Practices for AI Compliance, Security, and Data Privacy: Continuous audits, data anonymization, and robust cybersecurity measures are essential for ensuring that AI systems comply with legal requirements and maintain data security.

Achieving Responsible AI Deployment: Responsible AI deployment requires a balance between innovation and ethical considerations. Governments must foster public trust through transparency, regulatory adherence, and by actively mitigating risks like bias and security threats.

Introduction

Interest in applying generative AI in the public sector is growing because of its potential to revolutionize administrative processes and public services. Artificial intelligence (AI) has made significant strides recently, especially in deep learning and generative models such as large language models (LLMs), which allow AI to perform a wide range of government-related tasks. In domains like law enforcement, criminal justice, social services, and administrative process automation, governments are using AI to enhance services through detection, prediction, and simulation. For instance, it was reported that by 2020, 45% of US federal agencies had already conducted AI experiments. This growing use shows how AI can improve service efficiency, better meet public expectations, and help address both individual and societal needs. Although adoption is still in its early stages, the usefulness and societal impact of AI will be greatly influenced by the way it is conceived and applied.

It is impossible to overestimate the importance of compliance, security, and data privacy in government AI programs since these elements are essential to upholding public confidence and guaranteeing responsible AI use. Government AI systems must follow operational guidelines and conform to social norms in order to prevent inadvertently aggravating prejudices or inflicting harm. In order to ensure that sensitive citizen data is protected and AI systems operate securely, governments must provide clear operational processes, technical standards, and legislative frameworks to guide the deployment of AI. One step toward developing an organized approach to AI governance, for instance, is the UK's AI Standards Hub, which covers almost 300 pertinent standards. These steps are essential to guaranteeing that AI systems enhance government operations while adhering to current legislation, acting transparently, and acting morally.

Key Use Cases of Generative AI in Government Agencies

The public sector could undergo a transformation by utilizing generative AI, as it offers the opportunity to improve accuracy, efficiency, and citizen participation. AI is being used by governments around the world to enhance services from national security to administrative work simplification. AI makes government agencies serve citizens better by automating repetitive activities and analyzing massive volumes of data.

The uses of AI go beyond simple automation: they change how governments handle data, engage with the public, and improve public services. The promise of generative AI lies in its capacity to handle large volumes of data, produce insights, and optimize workflows, all of which can result in more effective governance. These are a few examples of generative AI's applications in government:

1. Public Services & Citizen Engagement

Generative AI greatly improves public services and citizen participation, especially when it comes to AI-powered virtual assistants. These virtual assistants, for example, can automate answers to questions from regular citizens about tax services or healthcare. Babylon Health's chatbot in Rwanda helps patients with the triage process, demonstrating how AI can expedite answers to frequently asked questions about healthcare. Similarly, Spain is a prime example of how AI can handle difficult questions spanning languages and services; it used IBM's Watson to respond to inquiries about value-added taxes. Furthermore, AI-powered language translation services—like those utilized by the Minnesota Department of Public Safety—are essential for facilitating smooth communication among diverse communities since they enable multilingualism in a variety of languages, including Somali and Spanish.

2. Document Processing and Analysis

Generative AI can transform the automation of administrative processes, especially those related to processing contracts, reports, and applications. Governments can improve efficiency and accuracy by streamlining procedures like approving permits or issuing visas. The U.S. Patent and Trademark Office (USPTO), where AI is used to scan vast databases when analyzing patent applications, illustrates how AI can help handle massive volumes of information quickly and accurately, reducing human error and speeding up bureaucratic processes.

This approach acknowledges that AI carries some hazards, such as potential mistakes and ethical issues, even if it can increase efficiency in processing large volumes of data, such as assessing patent applications or doing prior art searches. Because of this, the USPTO upholds stringent guidelines pertaining to the obligation of candor and good faith, requiring all parties to proceedings to provide any material information pertinent to patentability. The integrity of submissions to the USPTO must not be jeopardized by the employment of AI.

In order to make sure that the implementation of AI does not lead to fraud or deliberate wrongdoing, the Office stresses the significance of human oversight. Any substantial contributions that artificial intelligence makes to the invention process must be disclosed if they have the potential to impact patentability. Moreover, practitioners need to confirm if AI tools used to write papers or claims are accurate and compliant with current patent regulations. Strong intellectual property regulations are ensured by the USPTO whose core value for patent examination is that human practitioners, not AI algorithms, are ultimately responsible for the integrity of the application.

3. Fraud Detection and Prevention

AI models are increasingly used for fraud detection and prevention in areas like social services and taxation. AI can spot unusual patterns that could point to fraud, provide real-time analysis, and so help protect public money. Cross-analyzing data at speed allows for the early detection of fraud that might otherwise go unnoticed, which can save governments large sums of money and supports an equitable distribution of social services.
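As a rough illustration of what "spotting unusual patterns" can mean in practice, the following minimal sketch flags benefit claims whose amounts sit far from the typical value, using a modified z-score based on the median absolute deviation. It is not a method described by any agency mentioned here: the function name, the sample amounts, and the 3.5 threshold are all illustrative assumptions, and a real system would combine many signals and route flagged cases to human reviewers.

```python
from statistics import median


def flag_outliers(amounts, threshold=3.5):
    """Return indices of amounts whose modified z-score exceeds `threshold`.

    Hypothetical helper: the 3.5 cutoff is a common rule of thumb,
    not a regulatory requirement.
    """
    med = median(amounts)
    # Median absolute deviation: a spread measure that a few extreme
    # values cannot easily distort.
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # no spread in the data, so nothing stands out
    # 0.6745 rescales the MAD so scores are comparable to standard z-scores.
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Illustrative claim amounts (made-up data); the sixth is far above the rest.
claims = [120.0, 135.5, 110.0, 128.0, 142.0, 9800.0, 131.0]
suspicious = flag_outliers(claims)  # indices to send for human review
```

A flag produced this way is only a prompt for investigation, not a finding of fraud, which is one reason human oversight remains part of the process.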

4. Policy Development and Decision-Making Support

Because AI can quickly process large datasets, it is a very useful tool for policymakers. By evaluating data trends and patterns, AI can help develop new laws or rules that take present socioeconomic conditions into account. The U.S. Department of Veterans Affairs uses AI to compile veterans' feedback and spot trends, showing how AI can provide insights that inform policy decisions, improve public services, and ensure that new policies respond to actual needs.

5. Law Enforcement & National Security

Because it provides tools for threat intelligence and predictive policing, generative AI is rapidly becoming indispensable in law enforcement and national security. For instance, the U.S. Department of Homeland Security employs AI in a number of security initiatives, such as FEMA's geospatial damage assessments and Customs and Border Protection's autonomous situational awareness. By predicting possible dangers and spotting new patterns in criminal activity or security lapses, AI technologies enable proactive measures that improve border protection and public safety.

Ensuring Compliance with Regulations

The speed at which artificial intelligence is being incorporated into many industries highlights the necessity of creating strong regulatory frameworks. Ensuring compliance is a fundamental premise that promotes public trust in AI systems, rather than just following the law as it stands. Regulations offer a vital safety net that strikes a balance between the preservation of individual liberties and social norms and innovation. Regulations must change in this dynamic environment where AI's capabilities are always developing in order to guarantee that data use is morally righteous, open, and responsible.

1. Regulatory Framework

A range of laws and standards shape the legal framework that governs data usage and AI implementation in the United States, including the Blueprint for an AI Bill of Rights and sector-specific rules like HIPAA for healthcare and FISMA for government data. The Blueprint, published by the White House as a non-binding framework, prioritizes fairness, accountability, and openness, and outlines principles intended to shield people from the risks associated with AI systems. Along with safeguards against algorithmic discrimination, it includes steps to ensure that systems are safe and effective. AI system developers and implementers are advised to carry out pre-deployment testing, monitor potential hazards, and take into account input from a variety of communities.

HIPAA tightly controls how personal health data is used in the healthcare industry, making sure AI systems in the medical domain respect patient privacy and data security laws. FISMA, on the other hand, makes sure that strict security guidelines are followed when using government data in AI to avoid breaches and illegal access. Furthermore, the National Institute of Standards and Technology (NIST) is essential in developing AI standards and making sure that technology innovations comply with ethical, national security, and safety regulations. NIST's achievements in post-quantum cryptography, for example, have contributed to the establishment of AI standards and offered frameworks for responsible AI deployment and safe data usage.

Protecting consumer rights requires adherence to privacy rules like the California Consumer Privacy Act (CCPA), particularly when AI handles high volumes of personal data. Consumers have rights under the CCPA regarding their data, including the right to know what personal information is being collected, to have it deleted, and to opt out of having it sold or shared. Other rights, like the right to correct inaccurate personal information, were added by the California Privacy Rights Act (CPRA), which took effect in 2023. These regulations require companies using AI systems to manage data appropriately. This means that AI developers must create systems that adhere to privacy laws by default. Examples of such requirements include limiting data collection to what is strictly necessary and guaranteeing transparency about how the data is used.

2. Security Considerations for Generative AI

Strong security measures become more necessary as generative AI systems advance. Unprecedented opportunities and serious cybersecurity threats are brought about by these breakthroughs, especially for government applications where sensitive data needs to be shielded from potential cyberattacks and unlawful access. This section looks at the various security issues that arise with generative AI, highlighting the necessity for all-encompassing approaches that include safe APIs, efficient identity management, and encryption.

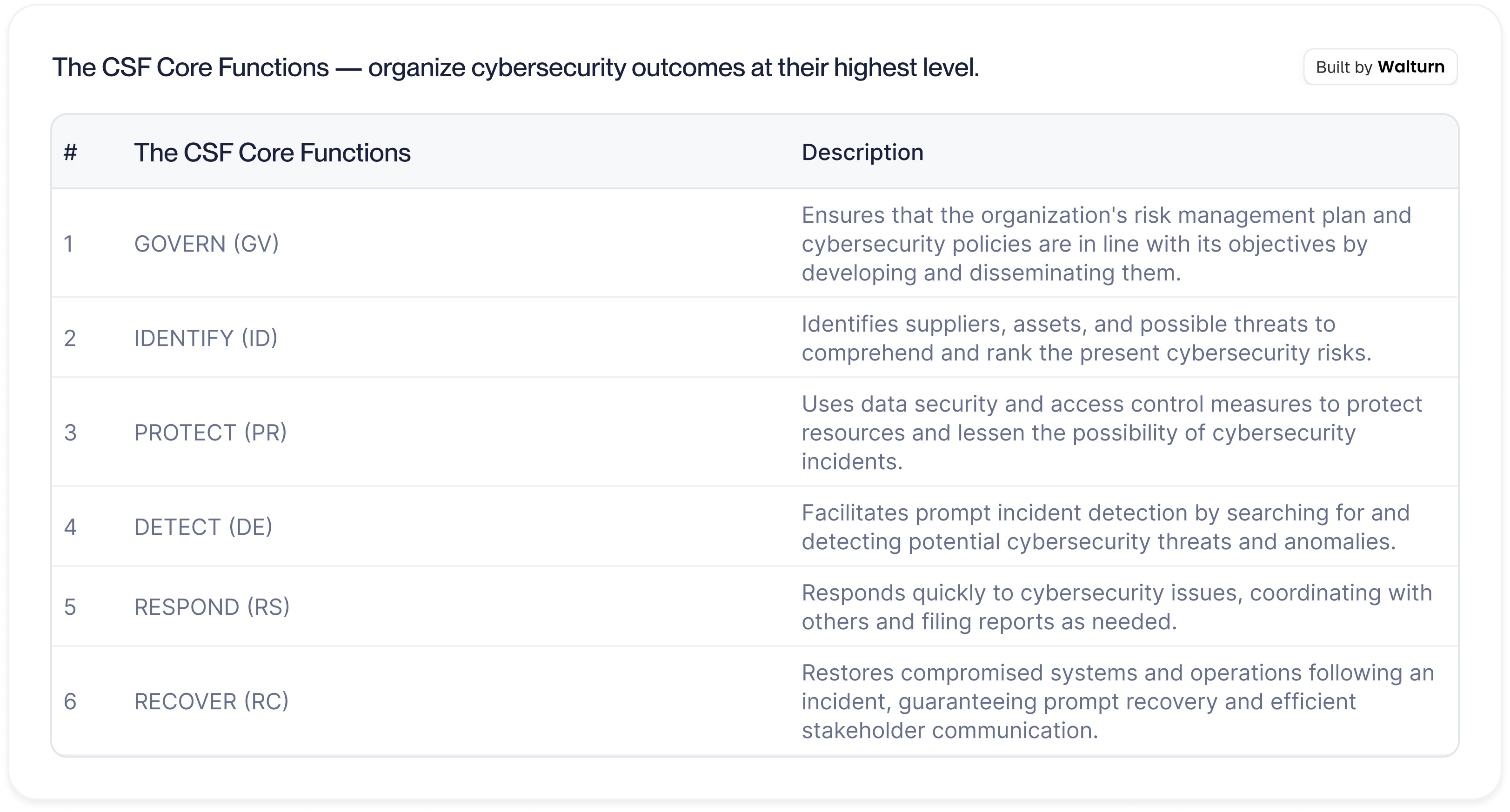

Given the dynamic nature of the threat landscape and the difficulty of mitigating cybersecurity threats, security considerations are becoming increasingly important. The Cybersecurity Framework (CSF) 2.0, developed by the National Institute of Standards and Technology, helps organizations evaluate, manage, and communicate their cybersecurity posture. It presents a taxonomy of high-level outcomes organized under six core Functions: Govern, Identify, Protect, Detect, Respond, and Recover.

These Functions act as a framework for organizations to properly manage their cybersecurity initiatives. By incorporating NIST's CSF into AI operations and fostering a proactive risk management culture, organizations can better align their cybersecurity efforts with their overarching objectives.

3. FedRAMP and Cloud Security Considerations

To ensure compliance with federal security regulations, enterprises using cloud services to implement generative AI applications must get FedRAMP (Federal Risk and Authorization Management Program) authorization. FedRAMP creates a uniform method for continuous monitoring, authorization, and security assessment for cloud services used by the federal government. There are two main ways to go about the authorization process: either ask a federal agency directly for authorization or apply for a provisional authorization through the Joint Authorization Board (JAB).

To ensure that cloud service offerings (CSOs) meet the strict requirements for managing government data, both approaches require thorough security assessments based on the Federal Information Security Modernization Act (FISMA) and NIST SP 800-53 security controls. Organizations may need to work with systems integrators who have experience navigating the federal procurement landscape in order to demonstrate their operational readiness and the sustainability of their services. A successful FedRAMP authorization also improves an organization's credibility and visibility within the FedRAMP Marketplace, which can lead to more opportunities in the federal market.

In addition to administering this authorization process, the FedRAMP PMO is crucial in offering cloud service providers the tools and assistance they need along the way. All things considered, giving FedRAMP compliance top priority is not only a legal need for businesses hoping to work with federal clients, but it is also a competitive edge that builds confidence and guarantees the protection of private information stored in the cloud.

4. Data Privacy in Generative AI

Strong data privacy rules are becoming more and more necessary as generative AI continues to transform many industries. Governments across the globe are faced with the difficult task of striking a balance between the necessary obligation to protect citizens' personal data and the potential advantages of AI technologies. This section looks at how various government programs can successfully manage data privacy issues while guaranteeing that AI discoveries have a significant positive impact on society.

To generate value for the public, government AI projects must put citizens' privacy first while making use of AI insights. Compliance with key laws such as the Privacy Act of 1974 and the General Data Protection Regulation (GDPR) is the foundation for this. The GDPR sets strict rules for the lawful transfer of personal data, highlighting the importance of handling data securely, particularly when interacting with third parties. The legal transfer of data between the EU and certified US entities has been made easier by the EU-US Data Privacy Framework, which took effect on July 10, 2023. Outside this framework, additional safeguards, like consent or binding corporate rules, are required to guarantee adequate data protection. The Privacy Act in the United States requires federal agencies to adhere to a code of fair information practices. This promotes transparency and trust in government data handling by informing citizens about the collection and use of personal data.

In addition, privacy impact assessments (PIAs) are essential for evaluating the risks connected to data processing in government AI initiatives. Under Article 35 of the GDPR, which calls them data protection impact assessments, they are mandatory when data processing is likely to pose a high risk to individual rights, and they must be carried out and documented before any such processing starts. The need for an assessment is determined by high-risk factors such as the use of sensitive data or the introduction of new technology. By conducting and updating PIAs on a regular basis, government initiatives can adhere to legal frameworks and actively address citizens' concerns regarding privacy and data security. This helps ensure that AI systems provide insights while upholding individual rights and public trust.

The UAE Case Study

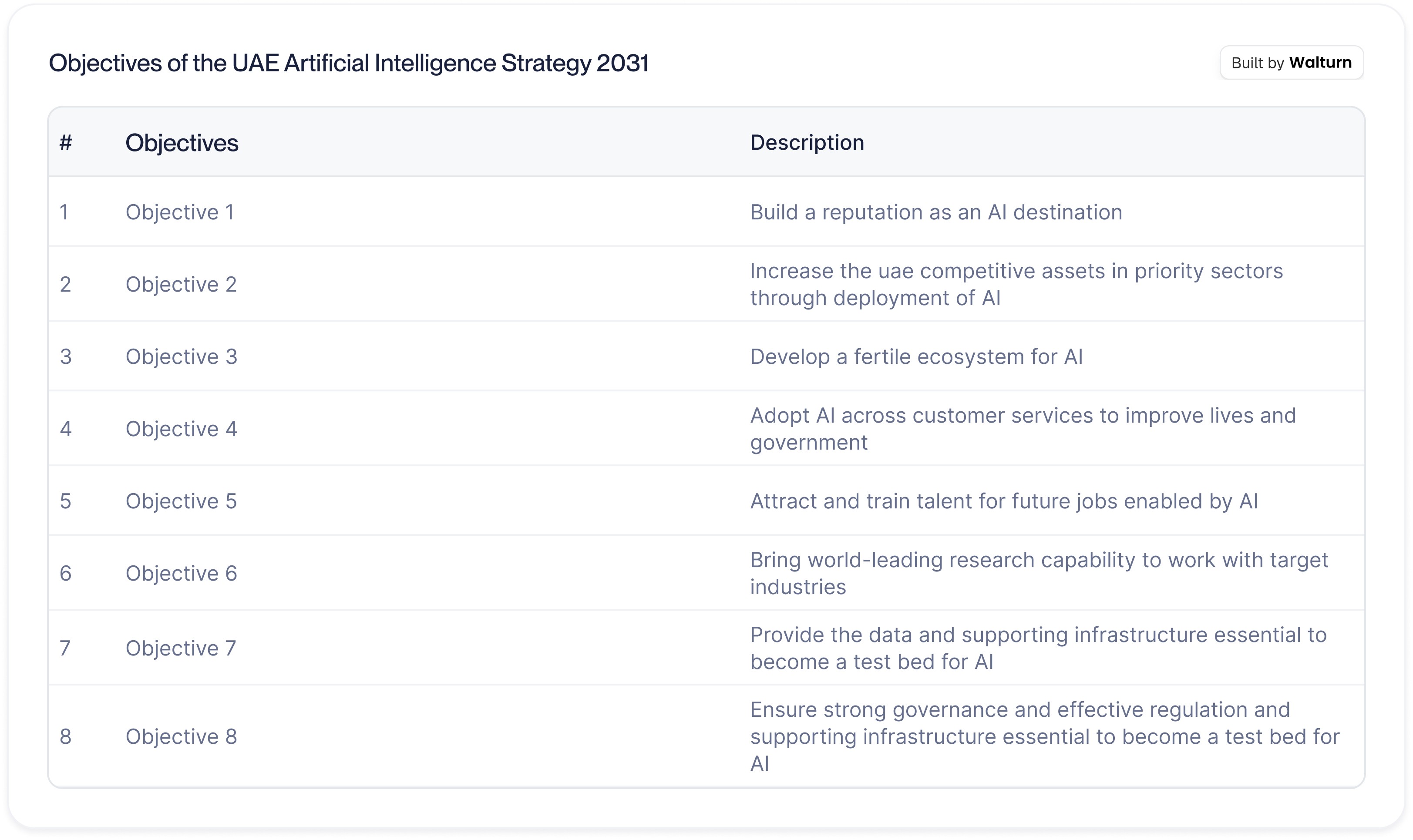

The United Arab Emirates (UAE) stands at the forefront of embracing artificial intelligence, exemplified by its ambitious UAE Artificial Intelligence Strategy 2031, which is in line with the country's overarching goal of leading the world in AI by 2031. This approach is centered on utilizing the nation's advantages, such as its industrial resources and developing industries like logistics and energy, in addition to supporting astute government programs and chances for data sharing and governance. The UAE's emphasis on establishing itself as an AI destination, boosting competitive assets, creating a thriving AI ecosystem, and implementing AI in public services is reflected in the AI Strategy's eight main objectives. The goals are outlined below:

Smart Dubai and the Dubai 10X program are key components of the UAE's larger digital transformation projects. They aim to integrate AI across sectors, improve public services, streamline government processes, and promote innovation. Launched in 2017, Dubai 10X seeks to keep Dubai ten years ahead of other global cities and encourages the government to adopt a future-focused perspective.

Forty-two creative projects have arisen as a result of this aim, all of which have significantly changed the way government organizations conduct their business. The objective of Dubai 10X 3.0, the initiative's third phase, is to change the way Dubai's government employees operate in order to promote cooperation and forward thinking. One of the main goals is to establish a collaborative team from several government sectors to launch a key initiative aimed at enhancing Dubai's quality of life. Moreover, the effort fosters a transition to novel operational frameworks, prioritizing innovation and collaboration in crucial domains including the economy, health, education, and sustainability.

1. UAE Regulatory Framework

In order to effectively manage the application of AI, the strategy also highlights the significance of attracting and educating talent, funding cutting-edge research, and building a strong governance framework. Furthermore, the UAE's Data Protection Law acts as a crucial regulatory tool that guarantees the privacy and security of personal data within the nation. By offering a governance framework for data management, protecting privacy, and guaranteeing adherence to international norms for cross-border data transfer, this law supports the AI framework.

As the official cybersecurity partner for the Dubai AI & Web3 Festival in 2024, the Dubai Electronic Security Center (DESC) has unveiled the Dubai AI Security Policy. By promoting confidence in AI technologies, promoting their advancement, and reducing cybersecurity concerns, this policy represents a critical turning point in the development of the city's artificial intelligence ecosystem. The CEO of DESC, His Excellency Yousuf Al Shaibani, stressed that this policy expedites the deployment of AI in critical sectors and is consistent with the UAE's National Strategy for Artificial Intelligence 2031. Additionally, it backs the more general objectives of the Dubai Economic Agenda (D33), which is to rank Dubai as one of the world's top three economic cities. Dubai's leadership in cybersecurity is reinforced by this policy, which sets clear security criteria to ensure ethical, responsible, and secure AI usage. It also encourages innovation and investment in AI.

2. UAE Perspective on Security Considerations for Generative AI

As the UAE accelerates its adoption of generative AI technologies, ensuring robust cybersecurity measures becomes paramount. Founded in November 2020, the UAE Cybersecurity Council is essential to monitoring the nation's cybersecurity environment, particularly with regard to the safety of AI-related technology. The council is charged with building a comprehensive cybersecurity strategy, which involves securing both current and upcoming technology and establishing a strong legal and regulatory framework to combat various cybercrimes. The council is chaired by the UAE Government's Head of Cybersecurity.

Legislation like Federal Decree Law No. 34 of 2021, which aims to improve protections against online offenses, combat rumors and cybercrimes, and preserve the integrity of government networks, further supports the council's activities. The council also leads programs like the RZAM app, which blocks and identifies harmful websites, and "Cyber Pulse," which educates the public about cybersecurity concerns and best practices. Together, these initiatives strengthen the UAE's cyber resilience and provide a safe atmosphere for both individuals and companies. They lay the groundwork for the integration of AI technology within a secure digital infrastructure.

3. UAE Perspective on Data Privacy in Generative AI

With the passage of the Personal Data Protection Law (Federal Decree Law No. 45 of 2021), the UAE has created a strong framework for data protection that guarantees adherence to global regulations like the GDPR. This law regulates the processing of personal data and requires the consent of the owners for any processing (with the exception of situations where it is required for the public interest or legal duties). It highlights the importance of protecting the privacy and confidentiality of data and gives people the ability to request changes or limit how their data is processed. It is supported by complementary laws such as Federal Law No. 15 of 2020 on Consumer Protection and the Federal Decree Law No. 34 of 2021 on Combatting Rumors and Cybercrimes, and together they create a favorable atmosphere for protecting data rights. This comprehensive approach reflects a commitment to safeguarding individual privacy while promoting a secure digital environment.

Additionally, the UAE is actively using AI to improve public services. The UAE's AI Strategy outlines initiatives to incorporate AI into government services, with the aim of improving service delivery to citizens and increasing efficiency. The creation of the role of Chief Executive Officer for Artificial Intelligence, who is in charge of organizing and directing AI activities across ministries, complements this strategic deployment. The UAE seeks to transform service delivery and maintain compliance with data protection regulations by using AI technology in an ethical and responsible manner. It has demonstrated its proactive approach to balancing technological progress and data privacy by publishing guides on generative AI and creating frameworks for AI applications. These developments represent a progressive approach to governance in the digital age.

Ethical and Bias Considerations

Because algorithmic bias in AI can produce unjust and discriminatory results that reinforce existing societal imbalances, it presents serious ethical challenges, especially in the context of generative AI systems. Research has indicated, for example, that criminal justice risk assessment tools such as COMPAS show biases against particular demographic groups, assigning higher risk scores to members of marginalized populations even when they have not committed any prior offenses. Similar criticism has been leveled at generative AI models like Stable Diffusion and DALL-E, which are accused of reinforcing negative stereotypes by frequently portraying people in ways that support cultural prejudices, such as linking men to leadership positions. These biases can lead to people being excluded from opportunities such as employment or from basic services such as healthcare, which exacerbates discrimination and rifts in society.

These biases have significant ethical ramifications that emphasize the necessity of accountability and justice in AI research. When biased outputs from generative AI models are generated, they not only mirror but also reinforce cultural prejudices. This influences public opinion and has real-world repercussions like erroneous arrests or restricted access to resources. A complete strategy is required to mitigate these concerns, including the diversification of training data, the use of bias-aware algorithms, and ongoing assessments of AI systems to guarantee ethical integrity and transparency. This all-encompassing approach is essential for reducing algorithmic bias's negative effects and advancing a more equitable AI environment.
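One way to make the "ongoing assessments" mentioned above concrete is to track a simple fairness metric over a system's decisions. The sketch below computes the rate at which each group is flagged as high risk and the gap between the highest and lowest rates (often called the demographic parity difference). The group labels, the sample data, and the function names are hypothetical, and this is only one of several fairness measures; which metric is appropriate depends on the application.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive decisions per group.

    `records` is an iterable of (group, flagged) pairs, where `flagged`
    is True when the system marked the person as high risk.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, flagged in records:
        totals[group] += 1
        positives[group] += int(flagged)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Made-up audit sample: group B is flagged twice as often as group A.
sample = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False),
]
rates = selection_rates(sample)
gap = parity_gap(sample)
```

A large gap does not by itself prove discrimination, but it is a signal that the model and its training data deserve closer review.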

To ensure fairness and transparency in AI, governments should take comprehensive action guided by ethical frameworks such as the US federal government's AI ethics guidelines. This involves putting in place industry-specific guidelines that address the issues encountered by sectors such as healthcare, e-commerce, and education, recognizing that a one-size-fits-all approach is insufficient. Furthermore, because the establishment of pertinent laws and standards frequently lags behind the rapid pace of innovation, it is imperative to promote continuing conversations about the emerging ethical implications of AI technologies. Close cooperation with civil society can help governments monitor technological advances and improve the transparency of AI systems. To establish a worldwide norm, it will also be essential to participate in developing international standards of AI ethics through groups like the Global Partnership on AI (GPAI) and the OECD.

The UK government's initiative to promote safe AI use is also demonstrated by the creation of an AI guide for public agencies. Additionally, utilizing public-benefit private sector projects like Microsoft's Datasheets for Datasets and IBM's AI Fairness 360 tool can yield important resources for reducing the dangers associated with AI. Governmental initiatives and private sector innovations can be combined to create a more transparent and egalitarian AI environment, which will increase public trust and confidence in AI technologies.

Best Practices for AI Compliance, Security, and Data Privacy

Leveraging AI's capabilities while protecting sensitive data from potential breaches and misuse presents a twofold challenge for organizations. The adoption of best practices for AI compliance, security, and data protection is necessary while navigating this complicated terrain.

1. Risk Management and Audits

Conducting frequent audits of AI systems is essential to guarantee adherence to changing rules and guidelines. A strong framework to manage data privacy is required due to the growing complexity of data settings. One such framework is the Security-Centric Enterprise Data Anonymization Governance Model. Sensitive data is safeguarded at every stage of its lifespan because of the security-centric approach to data anonymization.

This paradigm integrates standardized procedures that help government, healthcare, and financial organizations adhere to legal and regulatory obligations. Organizations can find weaknesses in their data handling procedures, evaluate the success of their anonymization strategies, and make sure they are complying with the most recent data protection laws by conducting regular audits. This approach is essential especially if we consider the 62% increase in data breaches recorded between 2020 and 2022, which affected almost 422.1 million people in the United States alone.

2. Data Minimization and Anonymization

Anonymization and data minimization are essential tactics for protecting data privacy without sacrificing the data's usefulness. For organizations that depend on data for research and analysis, it is critical to remove identifying information from datasets while preserving usability. The governance model described above calls for a multi-model approach to data anonymization, which combines strategies like data masking, differential privacy, and machine learning, alongside collecting only the data that AI models actually need. This preserves the quality of data analysis and reduces the chance of disclosing private information. Organizations also need to put in place administrative and technical controls to make sure their anonymization procedures are working. By implementing these tactics, organizations can improve the security of sensitive data and support adherence to data protection laws.
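The following minimal sketch shows how three of these ideas might fit together: dropping fields that are not needed (minimization), replacing an identifier with a keyed hash (a form of masking), and adding Laplace noise to a published count (the basic mechanism behind differential privacy). The field names, the key, and the epsilon value are illustrative assumptions. Note that keyed hashing is pseudonymization rather than full anonymization, since whoever holds the key can re-link records.

```python
import hashlib
import hmac
import random

# Hypothetical allow-list: only the fields the analysis actually needs.
ALLOWED_FIELDS = {"citizen_id", "age", "district"}


def minimize(record):
    """Keep only allow-listed fields (data minimization)."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def pseudonymize(value, secret_key):
    """Replace an identifier with a truncated keyed SHA-256 digest."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def noisy_count(true_count, epsilon, rng):
    """Release a count with Laplace noise.

    A counting query changes by at most 1 when one person is added or
    removed, so the noise scale is 1 / epsilon.
    """
    return true_count + laplace_noise(1 / epsilon, rng)


# Made-up record and demo key; a real key would come from a secrets manager.
record = {"citizen_id": "ID-000123", "name": "Jane Doe", "age": 34,
          "district": "North", "phone": "555-0100"}
key = b"demo-key-not-for-production"

safe = minimize(record)
safe["citizen_id"] = pseudonymize(safe["citizen_id"], key)

rng = random.Random(42)  # seeded only to make the demo repeatable
released = noisy_count(1200, epsilon=0.5, rng=rng)
```

Smaller epsilon values add more noise and give stronger privacy at the cost of accuracy, so the choice is a policy decision as much as a technical one.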

3. Cross-Border Data Sharing Protocols

Significant obstacles stand in the way of cross-border data sharing, especially between nations with disparate data privacy laws and standards, such as the United States and the United Arab Emirates. There is a systematic framework available in the suggested Security-Centric Enterprise Data Anonymization Governance Model that can help with compliance with different regulatory requirements. This model proposes a multi-model strategy that combines different methods to guarantee that cross-border data sharing stays compliant and safe. For example, using blockchain technology and data encryption can help produce tamper-proof records of data access, guaranteeing that data privacy is preserved even when shared globally. It is imperative for organizations to establish unambiguous rules and protections that effectively tackle the intricacies of diverse privacy laws and standards. Organizations can benefit from global data collaboration while guaranteeing the security of sensitive data by putting in place strong cross-border data-sharing protocols.
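To illustrate the idea behind tamper-resistant records of data access, here is a minimal hash-chain sketch: each log entry stores the hash of the previous entry, so changing any earlier entry breaks verification of the chain. This is a simplified stand-in for a blockchain, not a full distributed ledger, and it makes tampering detectable rather than impossible. The event fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(event, prev_hash):
    """Hash an event together with the previous entry's hash."""
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an access event, linking it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash,
                "hash": _entry_hash(event, prev_hash)})


def verify(log):
    """Return True if every entry still matches its recorded hash and link."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Made-up access events for a dataset shared across borders.
access_log = []
append_entry(access_log, {"user": "analyst-1", "dataset": "permits-2024",
                          "action": "read"})
append_entry(access_log, {"user": "analyst-2", "dataset": "permits-2024",
                          "action": "export"})
chain_ok = verify(access_log)
```

In practice the latest hash would be shared with, or anchored by, the partner organization so that neither side can silently rewrite the history.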

4. Public Trust and Transparency

When using AI in government entities, transparency and public trust are crucial. The public must be provided with clear information about how AI is applied, what benefits it brings, and what security measures protect personal information. Research emphasizes how urgent it is for organizations to implement strong data privacy policies in view of the growing number of data breaches. Organizations can show their commitment to safeguarding sensitive data by putting in place a multi-model strategy for data anonymization, as discussed earlier. Examples of transparent approaches that promote public trust are public disclosures of data processing procedures and frequent audits. Furthermore, agencies should place a high priority on publishing clear information about the efficacy of their data privacy policies, since this can greatly boost public trust in AI systems used for governmental purposes. This proactive strategy fosters a culture of accountability and responsibility in data governance while also protecting individual privacy.

Conclusion

In summary, the introduction of generative AI into government organizations offers a transformative opportunity that goes beyond technological development and opens the door to more effective, responsive administration. As these organizations navigate the challenges of contemporary public service, they must prioritize strong frameworks that guarantee data privacy, regulatory compliance, and ethical standards. Generative AI holds great promise for improving citizen engagement, streamlining procedures, and enhancing service delivery, but it comes with an inherent obligation to protect human rights and social values.

The discourse around government deployment of these technologies needs to place a strong emphasis on accountability and transparency. In addition to increasing public confidence, this will help ensure that the advantages of generative AI are distributed fairly across all groups. Promoting cooperation between government agencies, private-sector innovators, and civil society can produce a holistic strategy that balances innovation with ethical considerations. In the end, generative AI's effectiveness in the public sector will hinge on a commitment to ongoing assessment and adjustment, so that it acts as a catalyst for good rather than a cause of injustice or harm.

References

Fagan, Mark. “AI for the People: Use Cases for Government.” M-RCBG Faculty Working Paper, https://www.hks.harvard.edu/sites/default/files/centers/mrcbg/working.papers/M-RCBG%20Working%20Paper%202024-02_AI%20for%20the%20People.pdf.

Ahmed, Abdullah. “HIPAA Compliance - a Comprehensive Guide for Healthcare Organizations - Walturn Insight.” Walturn.com, 2024, www.walturn.com/insights/hipaa-compliance-understanding-and-mitigating-risks-in-healthcare-data-privacy.

“Artificial Intelligence in Government Policies | the Official Portal of the UAE Government.” U.ae, u.ae/en/about-the-uae/digital-uae/digital-technology/artificial-intelligence/artificial-intelligence-in-government-policies.

“Cyber Safety and Digital Security - the Official Portal of the UAE Government.” U.ae, u.ae/en/information-and-services/justice-safety-and-the-law/cyber-safety-and-digital-security.

“Cyber Security Council UAE.” Cybersecurityintelligence.com, 2024, cybersecurityintelligence.com/cyber-security-council-uae-9648.html.

“Data Protection Laws - the Official Portal of the UAE Government.” U.ae, 13 May 2024, u.ae/en/about-the-uae/digital-uae/data/data-protection-laws.

“Dubai 10x Initiative UAE.” Dubai Future Foundation, www.dubaifuture.ae/initiatives/future-design-and-acceleration/dubai-10x.

“Guidance on Use of Artificial Intelligence-Based Tools in Practice Before the United States Patent and Trademark Office.” Federal Register, 11 Apr. 2024, www.federalregister.gov/documents/2024/04/11/2024-07629/guidance-on-use-of-artificial-intelligence-based-tools-in-practice-before-the-united-states-patent.

FedRAMP® CSP Authorization Playbook. www.fedramp.gov/assets/resources/documents/CSP_Authorization_Playbook.pdf.

Ferrara, Emilio. Fairness and Bias in Artificial Intelligence: A Brief Survey of Sources, Impacts, and Mitigation Strategies. No. 2, 2023, arxiv.org/pdf/2304.07683.

“General Data Protection Regulation (GDPR) – Final Text Neatly Arranged.” General Data Protection Regulation (GDPR), 2018, gdpr-info.eu/issues/privacy-impact-assessment/.

Mohta, Bhavicka. “Understanding CCPA: A Deep Dive into California’s Data Privacy Legislation - Walturn Insight.” Walturn.com, 2024, www.walturn.com/insights/understanding-ccpa-a-deep-dive-into-california-s-data-privacy-legislation.

National Program for Artificial Intelligence. UAE National Strategy for Artificial Intelligence 2031. ai.gov.ae/wp-content/uploads/2021/07/UAE-National-Strategy-for-Artificial-Intelligence-2031.pdf.

NIST. “The NIST Cybersecurity Framework (CSF) 2.0.” 26 Feb. 2024, nvlpubs.nist.gov/nistpubs/CSWP/NIST.CSWP.29.pdf, https://doi.org/10.6028/nist.cswp.29.

Office of Privacy and Civil Liberties. “Overview of the Privacy Act: 2020 Edition.” Www.justice.gov, 14 Oct. 2020, www.justice.gov/opcl/overview-privacy-act-1974-2020-edition/introduction.

“Standards.” NIST, 30 June 2016, www.nist.gov/standards.

Straub, Vincent J., et al. “Artificial Intelligence in Government: Concepts, Standards, and a Unified Framework.” Government Information Quarterly, vol. 40, no. 4, 1 Oct. 2023, p. 101881, www.sciencedirect.com/science/article/pii/S0740624X23000813, https://doi.org/10.1016/j.giq.2023.101881.

The White House. “Blueprint for an AI Bill of Rights.” Oct. 2022, www.whitehouse.gov/ostp/ai-bill-of-rights/.

Wes. “Dubai Electronic Security Center Launches the Dubai AI Security Policy - DESC.” DESC, 20 Sept. 2024, www.desc.gov.ae/dubai-electronic-security-center-launches-the-dubai-ai-security-policy/.

Şahin, Yağmur, and İbrahim Dogru. “An Enterprise Data Privacy Governance Model: Security-Centric Multi-Model Data Anonymization.” Uluslararası Mühendislik Araştırma ve Geliştirme Dergisi, 15 Apr. 2023, https://doi.org/10.29137/umagd.1272085.