Comparing Keywords AI and Helicone

Summary

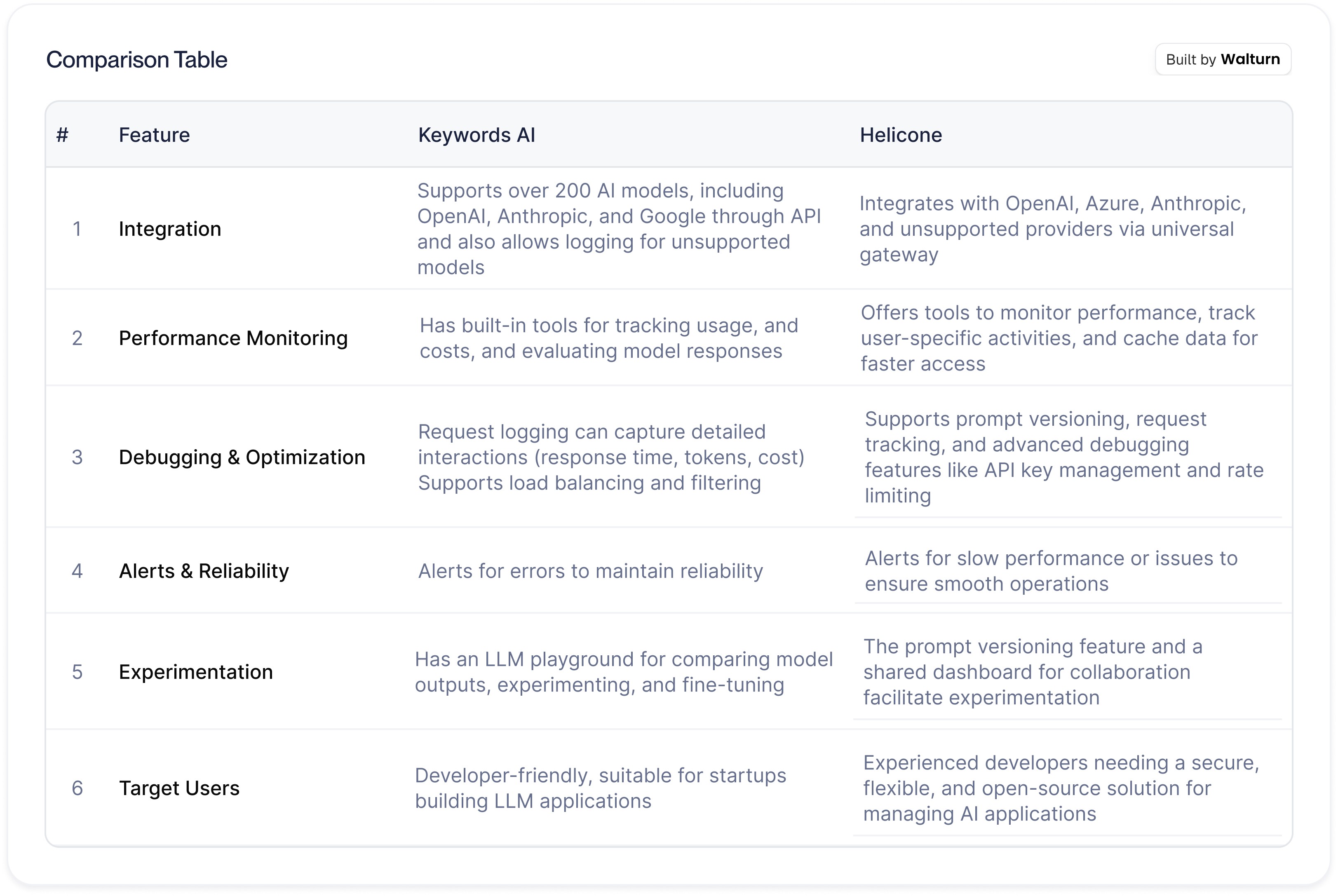

Keywords AI and Helicone are two leading LLM monitoring platforms that allow developers to track, debug, and optimize their large language model (LLM) applications. Keywords AI offers integration with over 200 models and provides comprehensive logging tools, while Helicone is open-source and focuses on flexibility, supporting a broad set of AI providers alongside features like rate limiting and session tracking.

Key insights:

LLM Monitoring Importance: Monitoring LLMs improves their performance, helps catch hallucinations, and detects prompt hacking.

Keywords AI: Keywords AI connects developers to over 200 models and provides tools for various tasks. It also supports major SDKs like OpenAI and LangChain.

Helicone Features: Helicone offers an open-source platform with increased flexibility, enhanced security features, and custom property labeling, making it suitable for a wide range of AI applications.

Pricing Structures: Both platforms provide free tiers for small teams and scalable, usage-based pricing for larger operations. Keywords AI meters usage in logs, while Helicone meters it in requests.

Enterprise Solutions: Both platforms offer enterprise-level packages that include compliance with standards like SOC-2 and HIPAA. This ensures high levels of security for larger organizations.

Introduction

Monitoring Large Language Models (LLMs) is important in industries like customer service and fraud detection, where these AI systems boost productivity and monitoring keeps them reliable, secure, and efficient. Effective LLM monitoring involves assessing the performance of models like GPT, Claude, and LLaMA to uncover issues like hallucinations, security risks, or degradation in accuracy. Tools like Keywords AI and Helicone are prominent platforms in this space, offering comprehensive LLM monitoring and debugging solutions.

This insight aims to provide a comparison between Keywords AI and Helicone to help readers understand which platform best suits their needs.

LLM Monitoring

1. What is LLM Monitoring?

LLM monitoring, also known as LLM observability, involves continuously tracking and analyzing the performance and behavior of LLMs. As businesses increasingly rely on LLMs for critical tasks, it becomes essential that these models operate smoothly and securely in production. However, due to their inherent complexity, LLMs are prone to issues such as hallucinations, performance degradation, and security vulnerabilities.

LLM monitoring addresses several key challenges. For example, it helps detect hallucinations where the model generates incorrect or misleading information. This is particularly important in industries that require high accuracy and dependability. Another significant concern is prompt injection, a form of prompt hacking where users manipulate the model into producing outputs that violate its intended operational boundaries.

LLM monitoring tools help safeguard against such attacks, ensuring that the models follow strict ethical and safety guidelines.

2. Benefits of LLM Monitoring

LLM monitoring offers a range of benefits that increase the reliability and performance of AI tools. One of the primary advantages is its ability to track and analyze model outputs in real-time, helping detect performance issues such as latency, model degradation, or hallucinations. By identifying these concerns early, developers can promptly address them, making sure that the AI tools remain accurate and reliable in production environments. This is especially crucial in sensitive areas such as customer service, fraud detection, or legal analysis.
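As a rough illustration of tracking latency per call, the sketch below wraps any text-in, text-out function and records how long each call took. It is not either platform's API: `LatencyMonitor`, `CallRecord`, `echo_model`, and the 2-second threshold are hypothetical names and values chosen for the example.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CallRecord:
    prompt: str
    response: str
    latency_s: float


class LatencyMonitor:
    """Wraps a text-in/text-out callable and stores one CallRecord per call."""

    def __init__(self, model_fn: Callable[[str], str], slow_threshold_s: float = 2.0):
        self.model_fn = model_fn
        self.slow_threshold_s = slow_threshold_s  # hypothetical alerting threshold
        self.records: List[CallRecord] = []

    def __call__(self, prompt: str) -> str:
        start = time.perf_counter()
        response = self.model_fn(prompt)
        latency = time.perf_counter() - start
        self.records.append(CallRecord(prompt, response, latency))
        return response

    def slow_calls(self) -> List[CallRecord]:
        """Return the calls whose latency exceeded the threshold."""
        return [r for r in self.records if r.latency_s > self.slow_threshold_s]


def echo_model(prompt: str) -> str:
    # Stand-in for a real LLM call so the sketch runs offline.
    return prompt.upper()


monitor = LatencyMonitor(echo_model)
monitor("hello")
print(len(monitor.records), "call(s) recorded;", len(monitor.slow_calls()), "slow")
```

A hosted monitoring platform would ship these records to a dashboard instead of keeping them in memory, but the measurement step is the same idea.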

Another benefit of LLM monitoring is its role in enhancing the security of AI applications. LLMs are vulnerable to attacks such as prompt injection or model manipulation, where users trick the model into generating prohibited content. With active monitoring, businesses can better safeguard against exploitation, ensuring that sensitive data remains protected. This proactive approach to security is particularly vital in industries like finance, healthcare, and law, where data breaches or inaccurate outputs can have serious consequences.

LLM monitoring also contributes to better cost management and operational efficiency. Tracking model usage and performance allows organizations to optimize resource allocation, minimize downtime, and reduce operational costs. Monitoring tools also provide insights into how models are used, allowing teams to fine-tune them, which can further reduce costs. Additionally, transparency in LLM operations builds trust with users and stakeholders, as it offers accountability in AI decision-making.

Core Features

1. Keywords AI

Keywords AI is an LLM observability platform that streamlines the development and monitoring of AI applications for developers. With a straightforward integration process, it provides access to over 200 AI models, including those from OpenAI, Anthropic, and Google, through its API. The platform is designed to emphasize uptime by using fallback models. Developers can easily compare model responses, evaluate AI performance, and track usage and costs with built-in tools and its dashboard. Keywords AI also offers a logging mechanism that ensures that logging does not interfere with application performance, which makes it suitable for latency-sensitive projects.
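The fallback idea can be sketched in a few lines: try models in priority order and return the first successful response. This is only an illustration of the general pattern, not how Keywords AI implements it. `call_with_fallback`, `flaky_primary`, and `stable_backup` are hypothetical names.

```python
from typing import Callable, List, Sequence, Tuple

ModelFn = Callable[[str], str]


def call_with_fallback(prompt: str, models: Sequence[Tuple[str, ModelFn]]) -> Tuple[str, str]:
    """Try each (name, fn) pair in order; return (name, response) from the first success."""
    errors: List[str] = []
    for name, fn in models:
        try:
            return name, fn(prompt)
        except Exception as exc:  # broad on purpose: any provider failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


def flaky_primary(prompt: str) -> str:
    # Stand-in for a provider that is currently unavailable.
    raise TimeoutError("primary timed out")


def stable_backup(prompt: str) -> str:
    # Stand-in for a healthy secondary provider.
    return f"backup answer to: {prompt}"


used, answer = call_with_fallback(
    "ping", [("primary", flaky_primary), ("backup", stable_backup)]
)
print(used, "->", answer)
```

Returning the name of the model that answered makes it possible to log how often the fallback path is taken.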

One of the features of Keywords AI is its suite of tools for debugging and optimization. Request logging automatically captures detailed user interactions, including response time, tokens, and cost, which allows developers to analyze performance and troubleshoot issues quickly. The platform also supports load balancing and distribution of requests across models to reduce latency and avoid request limit errors. Keywords AI also allows developers to optimize performance by caching common requests and filtering models based on specific use cases, such as image or vision functionality. The platform’s ability to version prompts, along with support for A/B testing, gives developers a way to improve LLM outputs without disrupting production.
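To make the "response time, tokens, and cost" part of a request log concrete, here is a minimal sketch of how such a record might be assembled. The field names, the `Pricing` structure, and the per-million-token prices are assumptions for illustration and do not reflect Keywords AI's actual log schema or any provider's real rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M prompt tokens (hypothetical rate)
    output_per_million: float  # USD per 1M completion tokens (hypothetical rate)


@dataclass(frozen=True)
class RequestLog:
    model: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float
    cost_usd: float


def build_log(model: str, prompt_tokens: int, completion_tokens: int,
              latency_ms: float, pricing: Pricing) -> RequestLog:
    """Compute the request cost from token counts and bundle it with the timing data."""
    cost = (
        prompt_tokens * pricing.input_per_million
        + completion_tokens * pricing.output_per_million
    ) / 1_000_000
    return RequestLog(model, prompt_tokens, completion_tokens, latency_ms, round(cost, 6))


example_pricing = Pricing(input_per_million=1.0, output_per_million=2.0)
log = build_log("example-model", 1000, 500, 120.0, example_pricing)
print(log)
```

Aggregating records like this per model or per user is what lets a dashboard show where latency and spend concentrate.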

Keywords AI also includes evaluation and monitoring tools. Developers can assess model responses based on various criteria, such as precision, readability, relevance, and sentiment, through Continuous Eval integration. The LLM playground also allows teams to experiment with and compare model outputs, which facilitates the creation of higher-quality datasets for fine-tuning. Alerts notify teams of errors, allowing them to promptly resolve concerns and maintain reliability. Overall, Keywords AI offers a comprehensive and developer-friendly platform, making it easier for startups to build, monitor, and optimize LLM applications.

2. Helicone

Helicone is an open-source observability platform that streamlines the monitoring, debugging, and optimization of large language models. It integrates with popular AI providers such as OpenAI, Azure, and Anthropic. Helicone also provides compatibility with unsupported providers via its universal gateway feature. This flexibility makes it a valuable tool, allowing developers to work with a wide variety of models without additional challenges. Key features include the ability to label requests with custom properties, create and version prompts, and use the Request API to track user-specific activities, improving both debugging efficiency and cost management.
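Custom property labeling is typically done by attaching extra HTTP headers to each proxied request. The sketch below only builds such a header dictionary and makes no network call. The header names (`Helicone-Auth`, `Helicone-User-Id`, `Helicone-Property-<Name>`) are written from recollection of Helicone's public documentation and should be treated as assumptions to verify against the current docs; `build_helicone_headers` and the example values are hypothetical.

```python
from typing import Dict, Optional


def build_helicone_headers(
    helicone_api_key: str,
    user_id: Optional[str] = None,
    properties: Optional[Dict[str, str]] = None,
) -> Dict[str, str]:
    """Build request headers that tag a call with a user and custom properties.

    Header names are assumptions based on Helicone's documented conventions.
    """
    headers = {"Helicone-Auth": f"Bearer {helicone_api_key}"}
    if user_id is not None:
        headers["Helicone-User-Id"] = user_id
    for name, value in (properties or {}).items():
        headers[f"Helicone-Property-{name}"] = str(value)
    return headers


example = build_helicone_headers(
    "sk-helicone-example",  # placeholder key, not a real credential
    user_id="user-123",
    properties={"Feature": "summarize", "Environment": "staging"},
)
for key, value in example.items():
    print(f"{key}: {value}")
```

Headers like these would be passed alongside the provider's own authorization header, so requests can later be filtered by user or property in the dashboard.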

Additionally, Helicone offers a suite of advanced tools to improve the security of AI applications. Features like user rate limiting and API key management allow developers to control access, preventing abuse while maintaining fair usage. The platform also supports caching, which allows faster access to frequently requested data. Alerts for issues like slow performance enable quick responses, ensuring the AI tools remain reliable. Furthermore, with secure API key vaults and log omission, Helicone improves the security of AI operations.
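Per-user rate limiting can be illustrated with a small sliding-window counter. This is a generic sketch of the technique, not Helicone's implementation. `SlidingWindowLimiter`, its parameters, and the injectable `clock` are hypothetical choices made so the example is easy to test.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class SlidingWindowLimiter:
    """Allow at most max_requests per user within any window of window_s seconds."""

    def __init__(self, max_requests: int, window_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self.max_requests = max_requests
        self.window_s = window_s
        self.clock = clock
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, user_id: str) -> bool:
        now = self.clock()
        hits = self._hits[user_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_s:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=2, window_s=60.0)
print([limiter.allow("alice") for _ in range(3)])
```

Keeping a separate queue per user means one heavy user cannot exhaust the quota of another, which is the "fair usage" property described above.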

Helicone also provides developers with tools for fine-tuning and experimenting with their models. The platform supports prompt versioning and analysis. This makes it easier for developers to experiment with and optimize model outputs. Additionally, Helicone’s customer portal allows teams and clients to access shared dashboards with custom permissions. Overall, Helicone stands out as a comprehensive solution for managing LLMs efficiently and securely.

Supported models

Both platforms value custom model integration and offer extensive support for a wide range of LLMs, allowing developers to work with and log requests for many different models. This flexibility lets developers use the models best suited to their specific needs, which makes both Keywords AI and Helicone powerful tools for LLM management.

1. Keywords AI

Keywords AI provides access to over 200 LLMs, including OpenAI, Anthropic, and Google models, all through its API. It also allows the integration of custom models by logging outputs through its logging endpoint. This flexibility allows developers to track and monitor both the default models (provided by Keywords AI) and their own open-source models, ensuring seamless operations.

2. Helicone

Helicone also supports a variety of models, including ones from OpenAI, Anthropic, Azure, and Cohere, offering comprehensive integration capabilities. This allows developers to easily track usage and performance for streaming and non-streaming requests. The automated mapping system ensures unsupported models can also be used by detecting and assigning the appropriate schema within a day. For increased flexibility, Helicone also provides support for custom models, enabling developers to integrate open-source models such as GPT-Neo or LLaMA through its Node.js SDK.

Pricing Structure

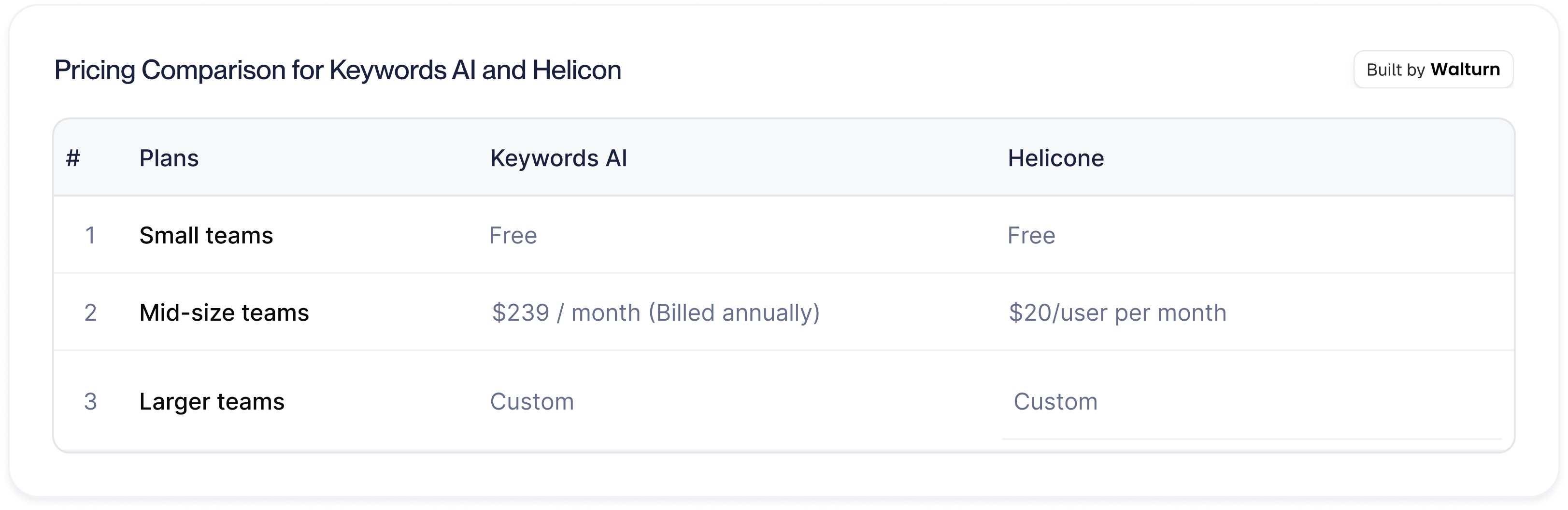

Keywords AI offers a range of pricing plans designed to accommodate users at various stages. The free plan, aimed at independent developers and smaller teams, offers 10k logs, access for up to two seats, and community support. The plan is free forever and provides an excellent starting point for smaller developers. As the team scales, they can opt for the Team plan at $239 per month (when billed annually), which includes 1 million logs and support for five team members, among other benefits. The Enterprise plan, aimed at larger teams, offers custom pricing, unlimited logs and seats, and HIPAA and SOC-2 compliance, among other perks.

On the other hand, Helicone provides a flexible pricing model that allows users to start for free and scale as they grow. Helicone’s Free plan includes 10k free requests per month, access to the performance dashboard, and other essential features. The Pro plan, priced at $20 per user per month, adds advanced features like caching, datasets, and log retention. For larger customers, Helicone offers the Enterprise plan which provides SOC-2 compliance, single sign-on (SSO), and dedicated SLAs, with pricing being based on a custom quote.

Both platforms offer usage-based pricing models, which let developers pay for what they use beyond the free tiers. While Keywords AI provides some free logs and focuses on the observability of logs, Helicone’s pricing is oriented toward requests, with free requests offered initially and affordable rates for additional requests. Both platforms also provide enterprise-level solutions with custom features and dedicated support. Overall, customers can expect usage (and pricing) to increase along with their operations.

The difference in pricing can be traced to what each platform meters. The Keywords AI subscription includes 1 million logs, while the Helicone subscription includes 100k requests, and this difference in included volume may explain the difference in price. Both platforms, however, expect users to pay separately once the included logs or requests are exceeded.
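A quick calculation shows how the two paid tiers compare on base subscription cost alone, using only the figures quoted in this article ($239 per month flat for up to five seats on the Keywords AI Team plan, and $20 per user per month on Helicone Pro). Overage rates are not given here, so the sketch deliberately leaves them out. The function names are hypothetical.

```python
from typing import Optional

KEYWORDS_TEAM_MONTHLY_USD = 239   # flat price when billed annually, per the article
KEYWORDS_TEAM_MAX_SEATS = 5       # seats included in the Team plan, per the article
HELICONE_PRO_PER_USER_USD = 20    # per user per month, per the article


def keywords_team_cost(seats: int) -> Optional[int]:
    """Flat Team price, or None if the team needs more seats than the plan lists."""
    if seats < 1:
        raise ValueError("seats must be at least 1")
    return KEYWORDS_TEAM_MONTHLY_USD if seats <= KEYWORDS_TEAM_MAX_SEATS else None


def helicone_pro_cost(seats: int) -> int:
    """Per-user Pro price multiplied by the number of seats."""
    if seats < 1:
        raise ValueError("seats must be at least 1")
    return seats * HELICONE_PRO_PER_USER_USD


for seats in (1, 3, 5):
    print(seats, "seat(s):", keywords_team_cost(seats), "vs", helicone_pro_cost(seats))
```

These numbers cover the subscription only. Actual spend depends on log or request volume beyond the included amounts, which is where the two plans differ most.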

Conclusion

Keywords AI and Helicone both offer comprehensive LLM monitoring solutions with features tailored to different needs. While Keywords AI is focused on ease of integration, Helicone emphasizes flexibility and enhanced security. Their pricing models allow developers to start small and scale as required, making both platforms strong options for optimizing and safeguarding LLM applications.

Find the Perfect LLM Monitoring Solution for Your Needs

Choosing the right platform to monitor, optimize, and secure your Large Language Model (LLM) is crucial for successful AI deployments. Whether you prioritize broad model access with Keywords AI or value the flexibility and open-source benefits of Helicone, Walturn has the expertise to guide you through it all. Our tailored consulting services will help you select and implement the best-fit LLM monitoring solution, ensuring your AI models perform reliably and securely.
