OpenAI announces GPT-4 Turbo

Summary

At OpenAI's DevDay, the company announced GPT-4 Turbo, a more capable and cheaper model with a 128K context window. It also unveiled improvements to function calling, the new Assistants API, and new modalities in the API, including image input (vision), DALL·E 3, and Text-to-Speech. OpenAI also emphasized customization with GPT-4 fine-tuning and supported the community with open-source releases such as Whisper large-v3.

Key insights:

Launch of GPT-4 Turbo, with a 128K context window, more recent knowledge, and lower prices.

Introduction of the Assistants API and enhancements in function calling.

Expansion into multimodal functionalities with vision capabilities and Text-to-Speech.

Commitment to customization through fine-tuning, and to the community through open-source releases.

Introduction

At OpenAI's much-anticipated DevDay conference, the company announced a range of advancements across its models and developer platform.

The announcements include model improvements, new API features, and considerable price reductions across the platform.

This article covers the major highlights and key updates from the event.

GPT-4 Turbo: Advancements

The centerpiece of the event was GPT-4 Turbo, a model that is more capable than GPT-4, cheaper to use, and supports a 128K-token context window:

Enhanced Knowledge Base: GPT-4 Turbo has knowledge of world events up to April 2023, compared with September 2021 for the original GPT-4.

Contextual Expansion: The 128K-token context window fits the equivalent of more than 300 pages of text in a single prompt, so the model can work with much longer documents at once.

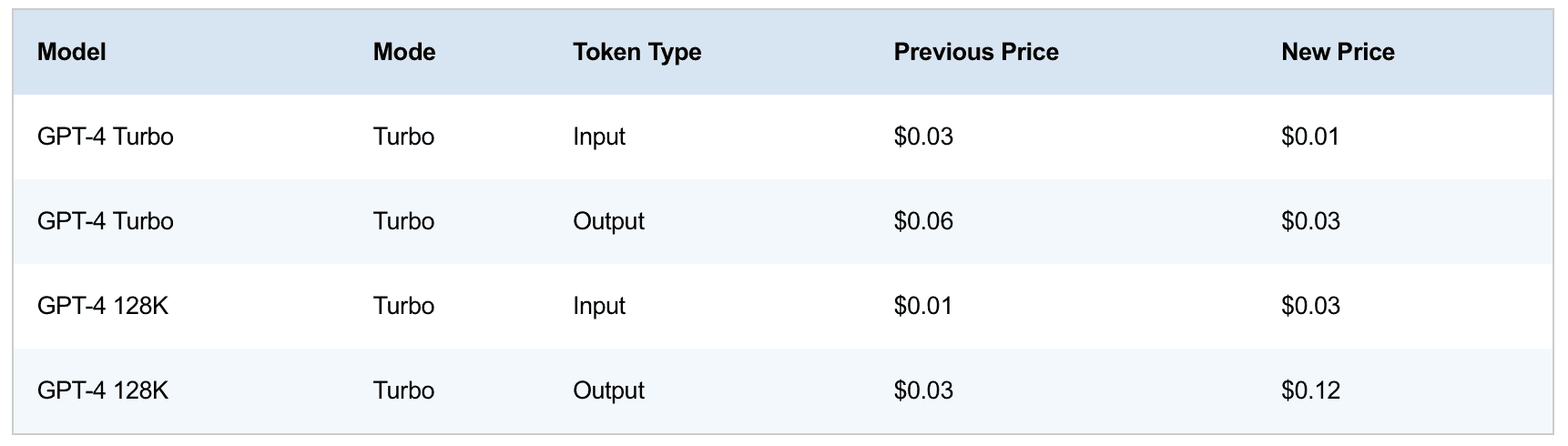

Cost Efficiency: GPT-4 Turbo is cheaper for developers: input tokens cost 3x less and output tokens 2x less than with GPT-4 ($0.01 versus $0.03 per 1K input tokens, and $0.03 versus $0.06 per 1K output tokens).
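To make the context-window and pricing figures concrete, here is a rough budgeting sketch. It assumes launch list prices of $0.03/$0.06 per 1K input/output tokens for GPT-4 (8K) and $0.01/$0.03 for GPT-4 Turbo, a 4,096-token output cap for the preview model, and the common rule of thumb of about four characters per token for English text. The dictionary keys and helper names are illustrative labels, not official identifiers.

```python
# Rough token budgeting and cost comparison (illustrative sketch).
# Assumptions: launch list prices in USD per 1K tokens, a 4,096-token output
# cap for GPT-4 Turbo preview, and ~4 characters per token for English text.

CONTEXT_WINDOW = 128_000   # GPT-4 Turbo context window, in tokens
MAX_OUTPUT_TOKENS = 4_096  # assumed output cap for the preview model

# (input price, output price) in USD per 1,000 tokens; keys are labels only
PRICES = {
    "gpt-4": (0.03, 0.06),
    "gpt-4-turbo": (0.01, 0.03),
}


def estimate_tokens(text: str) -> int:
    """Very rough estimate: about 4 characters per token for English text."""
    return max(1, len(text) // 4)


def fits_in_context(prompt_tokens: int, output_tokens: int = MAX_OUTPUT_TOKENS) -> bool:
    """The prompt and the completion share the same context window."""
    return prompt_tokens + output_tokens <= CONTEXT_WINDOW


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Price a single request from token counts."""
    in_price, out_price = PRICES[model]
    return input_tokens / 1000 * in_price + output_tokens / 1000 * out_price


if __name__ == "__main__":
    # A long-document request that only GPT-4 Turbo's window can hold.
    print(fits_in_context(100_000, 2_000))
    # A request small enough for GPT-4 (8K), priced on both models.
    for model in PRICES:
        print(model, round(cost_usd(model, 6_000, 1_000), 2))
```

For a 6,000-token prompt and a 1,000-token reply this gives about $0.24 with GPT-4 and $0.09 with GPT-4 Turbo. For exact counts, a real tokenizer such as tiktoken is a better choice than the character heuristic.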

Function Calling and Model Updates

Function calling lets developers describe functions to the model and have it return a JSON object containing the arguments needed to call them. DevDay brought several improvements: the model can now call multiple functions in a single message (parallel function calling), and GPT-4 Turbo is more likely to return the right function parameters.
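The sketch below shows what handling parallel function calls can look like on the application side. It uses a hand-written assistant message shaped like a Chat Completions `tool_calls` array instead of a live API call, and `get_weather`, its fake data, and the call IDs are hypothetical. The key point is that one model message may carry several calls, each of which must be executed and answered with its own `tool` message.

```python
import json

# Tool definition in the Chat Completions "tools" format (JSON Schema parameters).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    },
]


def get_weather(city: str) -> dict:
    # Hypothetical local implementation; a real app would call a weather service.
    fake_temps = {"Paris": 18, "Tokyo": 22}
    return {"city": city, "temp_c": fake_temps.get(city)}


REGISTRY = {"get_weather": get_weather}


def run_tool_calls(tool_calls: list) -> list:
    """Execute every tool call from one assistant message and build the
    'tool' role messages to send back to the model."""
    results = []
    for call in tool_calls:
        fn = REGISTRY[call["function"]["name"]]
        # Arguments arrive as a JSON-encoded string, not as a dict.
        args = json.loads(call["function"]["arguments"])
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],  # ties each result to its call
            "content": json.dumps(fn(**args)),
        })
    return results


# Hand-written sample of a message requesting two calls in parallel.
sample_tool_calls = [
    {"id": "call_1", "type": "function",
     "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
    {"id": "call_2", "type": "function",
     "function": {"name": "get_weather", "arguments": '{"city": "Tokyo"}'}},
]

for message in run_tool_calls(sample_tool_calls):
    print(message)
```

In a full loop, these `tool` messages are appended to the conversation after the assistant message and sent back so the model can compose its final answer.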

GPT-3.5 Turbo was also updated: the new version supports a 16K context window by default, improved instruction following, JSON mode, and parallel function calling. OpenAI reports a 38% improvement on format-following tasks such as generating JSON, XML, and YAML.
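As a minimal sketch of JSON mode, the request body below sets `response_format` to `{"type": "json_object"}` for the `gpt-3.5-turbo-1106` model. The helper names are hypothetical, and the example builds the body locally rather than sending it. JSON mode expects the word "JSON" to appear in the messages and guarantees syntactically valid JSON, not any particular schema, so the parser still checks the shape it needs.

```python
import json


def build_json_mode_request(user_prompt: str, model: str = "gpt-3.5-turbo-1106") -> dict:
    """Build a Chat Completions request body with JSON mode enabled."""
    messages = [
        # JSON mode expects "JSON" to be mentioned somewhere in the messages.
        {"role": "system", "content": "You are a helpful assistant. Reply only with a JSON object."},
        {"role": "user", "content": user_prompt},
    ]
    return {
        "model": model,
        "response_format": {"type": "json_object"},
        "messages": messages,
    }


def parse_json_reply(content: str) -> dict:
    """Parse the model's reply; valid JSON is guaranteed, the schema is not."""
    data = json.loads(content)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return data


request = build_json_mode_request("List three primary colors under the key 'colors'.")
print(request["response_format"])  # {'type': 'json_object'}
print(parse_json_reply('{"colors": ["red", "yellow", "blue"]}')["colors"])
```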

Assistants API

The new Assistants API lets developers build agent-like experiences within their own applications.

Assistants are purpose-built AIs that follow specific instructions, draw on additional knowledge, and call models and tools to perform tasks.

With the Assistants API, OpenAI aims to make it easier to build high-quality AI apps by handling much of the heavy lifting developers previously did themselves.

The API introduces persistent and infinitely long threads, so developers no longer need to store conversation history or work around context-window limits themselves.

Assistants can also call tools: Code Interpreter (writes and runs Python code in a sandbox), Retrieval (draws on documents the developer uploads), and function calling (invokes developer-defined functions).
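Here is a minimal sketch of how an app might use a persistent thread: append a user message, start a run, poll until it finishes, and read the newest reply. It assumes `client` is an `openai.OpenAI()` instance exposing the beta Assistants interface of the openai Python SDK 1.x at launch (`client.beta.threads...`); method details may have changed since, and `ask_on_thread` is a hypothetical helper. Runs that pause for function outputs (`requires_action`) are deliberately not handled here.

```python
import time


def ask_on_thread(client, assistant_id: str, thread_id: str, question: str,
                  poll_seconds: float = 1.0) -> str:
    """Add a user message to an existing thread, run the assistant on it,
    wait for the run to finish, and return the newest assistant reply.

    `client` is assumed to be an openai.OpenAI() instance (beta Assistants
    interface); any object with the same methods will work.
    """
    # The thread stores the conversation server-side, so only the new message is sent.
    client.beta.threads.messages.create(thread_id=thread_id, role="user", content=question)
    run = client.beta.threads.runs.create(thread_id=thread_id, assistant_id=assistant_id)

    # Runs are asynchronous: poll while they are queued or in progress.
    while run.status in ("queued", "in_progress"):
        time.sleep(poll_seconds)
        run = client.beta.threads.runs.retrieve(thread_id=thread_id, run_id=run.id)

    # Anything else (failed, expired, requires_action, ...) is treated as an error here.
    if run.status != "completed":
        raise RuntimeError(f"run ended with status {run.status!r}")

    # Messages are listed newest first by default.
    messages = client.beta.threads.messages.list(thread_id=thread_id)
    for message in messages.data:
        if message.role == "assistant":
            return message.content[0].text.value
    raise RuntimeError("no assistant reply found")
```

Because the thread persists, calling `ask_on_thread` again with the same `thread_id` continues the same conversation without resending earlier messages.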
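To illustrate how these tools are switched on, the sketch below builds keyword arguments for `client.beta.assistants.create(...)` in the openai Python SDK. The `code_interpreter` and `retrieval` tool types reflect the API as launched at DevDay and may differ in later versions; the model ID `gpt-4-1106-preview`, the helper `build_assistant_config`, and the `lookup_order` function are assumptions for illustration.

```python
from typing import Optional


def build_assistant_config(name: str, instructions: str,
                           functions: Optional[list] = None) -> dict:
    """Keyword arguments for client.beta.assistants.create(...), enabling the
    built-in tools plus any developer-defined functions."""
    tools = [{"type": "code_interpreter"}, {"type": "retrieval"}]
    for fn in functions or []:
        # Custom functions use the same JSON Schema format as Chat Completions.
        tools.append({"type": "function", "function": fn})
    return {
        "name": name,
        "instructions": instructions,
        "model": "gpt-4-1106-preview",
        "tools": tools,
    }


config = build_assistant_config(
    "Data helper",
    "Answer questions about uploaded CSV files; use code to compute results.",
    functions=[{
        "name": "lookup_order",  # hypothetical function for illustration
        "description": "Look up an order by its ID.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    }],
)
print([tool["type"] for tool in config["tools"]])  # ['code_interpreter', 'retrieval', 'function']
# With the SDK: assistant = client.beta.assistants.create(**config)
```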

New Modalities in the API

OpenAI added new modalities to the API: GPT-4 Turbo with vision, DALL·E 3, and Text-to-Speech (TTS). GPT-4 Turbo with vision accepts images as input in the Chat Completions API, enabling tasks such as generating captions and analyzing real-world images in detail.
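As a rough sketch, an image can be passed to a vision-capable Chat Completions model as one part of a multi-part user message. This example inlines the image as a base64 data URL; the model name `gpt-4-vision-preview`, the helper `image_message`, and the placeholder bytes are assumptions, and a public image URL can be used in place of the data URL.

```python
import base64


def image_message(prompt: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build a user message that mixes text and an inline image, for a
    vision-capable Chat Completions model (assumed: "gpt-4-vision-preview")."""
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }


# Placeholder bytes; a real app would read an image file from disk.
msg = image_message("Write a one-sentence caption for this image.", b"\x89PNG...")
print(msg["content"][1]["image_url"]["url"][:22])  # data:image/png;base64,
```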

DALL·E 3, previously launched to ChatGPT Plus and Enterprise users, is now available to developers through the Images API for generating images programmatically. The Text-to-Speech API converts text into human-quality speech using six preset voices.
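The sketch below prepares keyword arguments for the openai Python SDK's `client.images.generate(...)` and `client.audio.speech.create(...)` and validates them locally. It assumes the launch-era options: DALL·E 3 sizes of 1024x1024, 1792x1024, and 1024x1792 with one image per request, the `tts-1` and `tts-1-hd` models, and the six voices available at launch. The helper names are hypothetical.

```python
# Launch-era options (assumed; later API versions may add more).
DALLE3_SIZES = {"1024x1024", "1792x1024", "1024x1792"}
TTS_VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}


def image_request(prompt: str, size: str = "1024x1024") -> dict:
    """Keyword arguments for client.images.generate(...) with DALL-E 3."""
    if size not in DALLE3_SIZES:
        raise ValueError(f"unsupported DALL-E 3 size: {size}")
    # DALL-E 3 generates one image per request.
    return {"model": "dall-e-3", "prompt": prompt, "size": size, "n": 1}


def speech_request(text: str, voice: str = "alloy", hd: bool = False) -> dict:
    """Keyword arguments for client.audio.speech.create(...)."""
    if voice not in TTS_VOICES:
        raise ValueError(f"unknown preset voice: {voice}")
    # tts-1 is tuned for speed; tts-1-hd for quality.
    return {"model": "tts-1-hd" if hd else "tts-1", "voice": voice, "input": text}


# With the SDK, these would be passed as:
#   client.images.generate(**image_request("A watercolor lighthouse at dusk"))
#   client.audio.speech.create(**speech_request("Welcome to DevDay!", voice="nova"))
print(image_request("A watercolor lighthouse at dusk", size="1792x1024"))
print(speech_request("Welcome to DevDay!", voice="nova"))
```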

Customization, Pricing, and Copyright

OpenAI also expanded customization by launching experimental access to GPT-4 fine-tuning and a Custom Models program, which lets selected organizations work with OpenAI researchers to train custom GPT-4 models for their specific domains.

Lower token prices across several models, along with higher rate limits (including a doubled tokens-per-minute limit for paying GPT-4 customers), aim to help developers scale their applications.

Additionally, through Copyright Shield, OpenAI pledges to step in and defend customers, and pay the costs incurred, if they face legal claims of copyright infringement. This applies to generally available features of ChatGPT Enterprise and the developer platform.
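Fine-tuning starts with training data. The sketch below serializes prompt/answer pairs into the chat-format JSONL that OpenAI uses for fine-tuning GPT-3.5 Turbo (one `{"messages": [...]}` object per line); it is an assumption that experimental GPT-4 fine-tuning accepts the same format. The helper name and example data are hypothetical.

```python
import json


def to_chat_jsonl(examples: list, system_prompt: str) -> str:
    """Serialize (prompt, ideal_answer) pairs into chat-format JSONL:
    one {"messages": [...]} object per line."""
    lines = []
    for prompt, answer in examples:
        record = {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": answer},  # the target behavior
        ]}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


data = to_chat_jsonl(
    [("What does 'force majeure' mean?",
      "An unforeseeable event that excuses a party from performing a contract.")],
    system_prompt="You are a concise legal glossary.",
)
print(data)
# The file would then be uploaded with client.files.create(..., purpose="fine-tune")
# and referenced in client.fine_tuning.jobs.create(training_file=..., model=...).
```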

OpenAI's Open Source Contributions

OpenAI's commitment to open source continues with Whisper large-v3, the next version of its open-source automatic speech recognition model. OpenAI also open-sourced the Consistency Decoder, a drop-in replacement for the Stable Diffusion VAE decoder that improves generated images, particularly text, faces, and straight lines.
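Because Whisper is open source, large-v3 can be run locally. This minimal sketch assumes the `openai-whisper` package (`pip install -U openai-whisper`) in a version recent enough to include the `large-v3` checkpoint, plus ffmpeg on the PATH; the first call downloads multi-gigabyte model weights. The `transcribe` helper and the audio path are illustrative.

```python
def transcribe(path: str, model_name: str = "large-v3") -> str:
    """Transcribe an audio file locally with the open-source Whisper package."""
    # Imported lazily so this module loads even without the package installed.
    import whisper

    model = whisper.load_model(model_name)  # downloads weights on first use
    result = model.transcribe(path)         # returns a dict with "text", "segments", ...
    return result["text"]


# Example (the path is a placeholder):
# print(transcribe("meeting.mp3"))
```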

Taken together, the DevDay announcements make OpenAI's models more capable and cheaper to use, and give developers more accessible, customizable, and legally protected AI tools.