Five Practical Tips for Designing Impactful and Safe AI Solutions for Learners

Artificial Intelligence

Design

Education

Summary

This article shares five practical strategies for designing AI solutions that are both impactful and safe for learners. It highlights the importance of data privacy and ethics, transparency, appropriate training data, continuous improvement through feedback, and inclusivity. Drawing on research, it shows how AI tools can enhance learning experiences while addressing ethical and technical challenges, so that they become more effective, trusted, and widely adopted.

Key insights:

Prioritize Data Privacy and Ethics: Upholding data privacy and ethical standards is essential in education. This requires a conservative approach and a commitment to fairness.

Ensure Transparency in AI: Following Explainable AI (XAI) principles makes it easier for users to trust educational AI.

Use Appropriate Data for Training: Training AI chatbots on age- and ability-appropriate datasets is crucial for creating safe and relevant learning experiences.

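Implement Feedback Loops for Improvement: Gathering diverse user feedback accelerates AI improvement and helps ensure equitable impact.

Design for Inclusivity and Accessibility: Accounting for learners’ diverse needs, and for historical biases in training data, is essential for equitable access to high-quality learning.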

Introduction

The proliferation of artificial intelligence (AI) solutions in education presents tremendous opportunities to enhance learning experiences, but it also brings unique risks. Key promises include wider access to personalization and accessibility, along with the automation of administrative tasks and some content creation. However, Daniel Schwartz, Dean of the Stanford Graduate School of Education, warns that AI will be used to automate some very bad ways of teaching. In other words, we will not achieve better results unless we examine our teaching methods and are extremely mindful about how we design, test, and implement AI in education.

AI tools also pose challenges concerning data privacy and user trust. Because of the far-reaching consequences and the issue’s global scale, some experts call for transnational regulations to protect learners’ privacy. However, legislation is lagging behind technology’s rapid development, and there is often uncertainty about which official body should regulate the use of AI in education. Educators’ mistrust, driven partly by these regulatory, privacy, and ethical concerns and exacerbated by a lack of crucial AI literacy skills, is a further barrier to the adoption of AI tools in education.

So what is needed to overcome privacy, ethical, and methodological concerns to build impactful and safe AI solutions for learning?

Five Practical Tips for Designing Impactful and Safe AI Solutions

In today’s rapidly evolving technological landscape and difficult economy, it is tempting to move fast and position yourself as a pioneer, but our advice is to balance caution and speed. Emphasizing a safe and methodologically sound approach to AI development will help you build robust products that inspire trust and that will ultimately outlast solutions that hit the market before they are truly ready.

Below, we introduce five steps for you to consider when designing and launching your AI capabilities for learning.

1. Prioritize Data Privacy and Develop an Ethical Product

In education, where personal data such as academic performance and demographics are involved, ensuring data privacy and fairness is crucial. However, relevant frameworks and regulations have not yet been consolidated, which can make it challenging to ensure that your product is, and will remain, compliant in all of your markets.

We advise adopting a conservative approach and upholding the principles of Trustworthy AI. Additionally, making a conscious effort to keep up to date on AI regulations and prioritizing users’ privacy and safety will go a long way toward winning the trust of your users and buyers and safeguarding your product. For example, a key ethical challenge in using AI tools in education is ensuring fairness and avoiding bias. Some mistakes here are likely inevitable, at least initially, but by communicating that ethical considerations matter to you and by taking incident reports and relevant research findings seriously, you can balance fast delivery with your users’ wellbeing and safety.

2. Ensure Transparency in AI Decisions

Many AI models, especially deep neural networks, are commonly described as “black boxes” because even their creators find them too complex to fully understand. If some of the world’s top researchers cannot fully explain how a neural network arrives at its outputs, how can educators and parents trust the AI-enabled tools built on them? This has led to calls for Explainable AI (XAI): models and practices that make clear how and why decisions were made when building a model, what its expected impact is, and what biases may be present.

We encourage product designers in the EdTech space to carefully document their AI model-building process and uphold XAI principles throughout development. While this may sound daunting if your organization has limited resources, this approach is critical for effective and safe applications of AI in education. This comprehensive paper is worth reading for a deeper understanding of key requirements, applications, and common pitfalls.

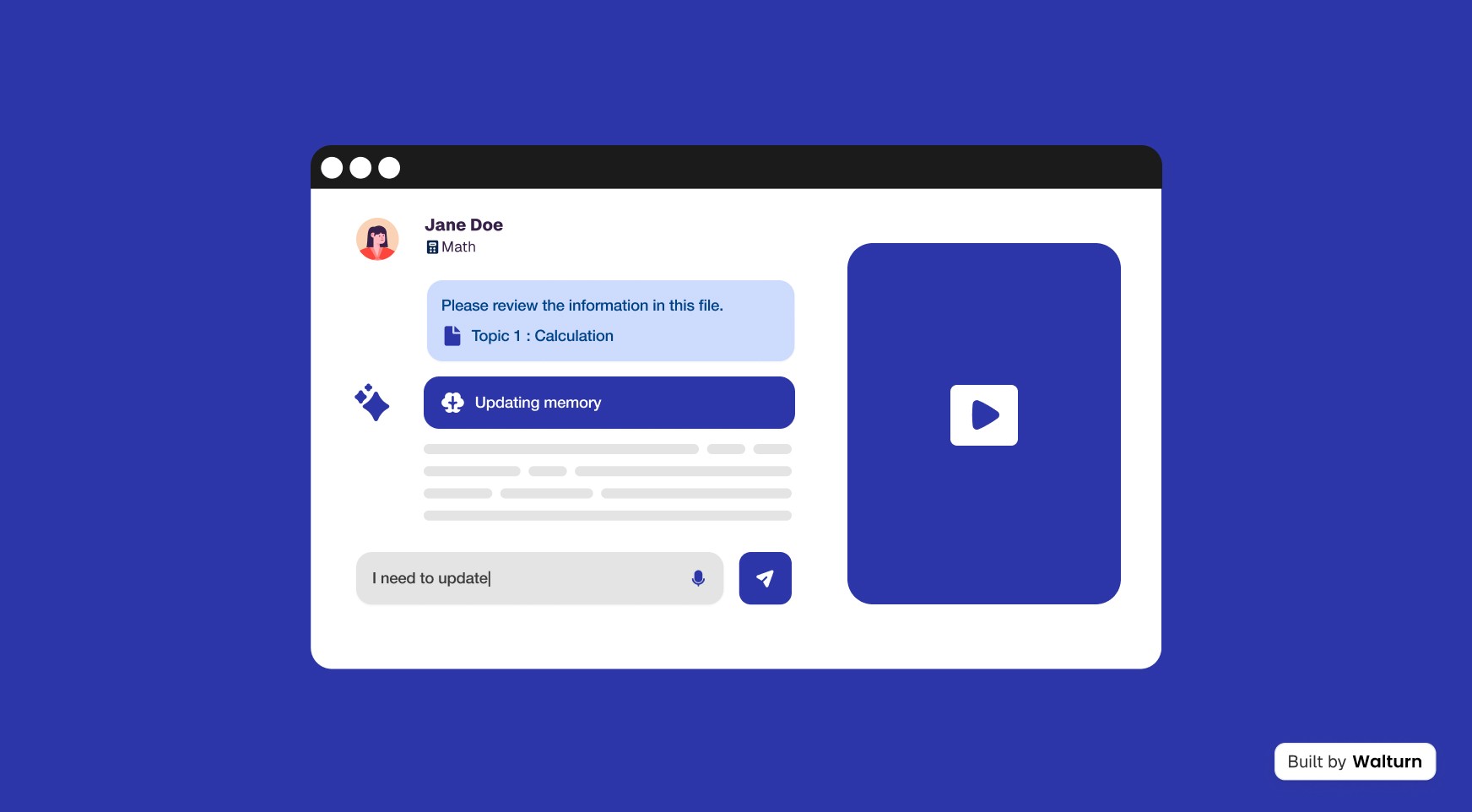

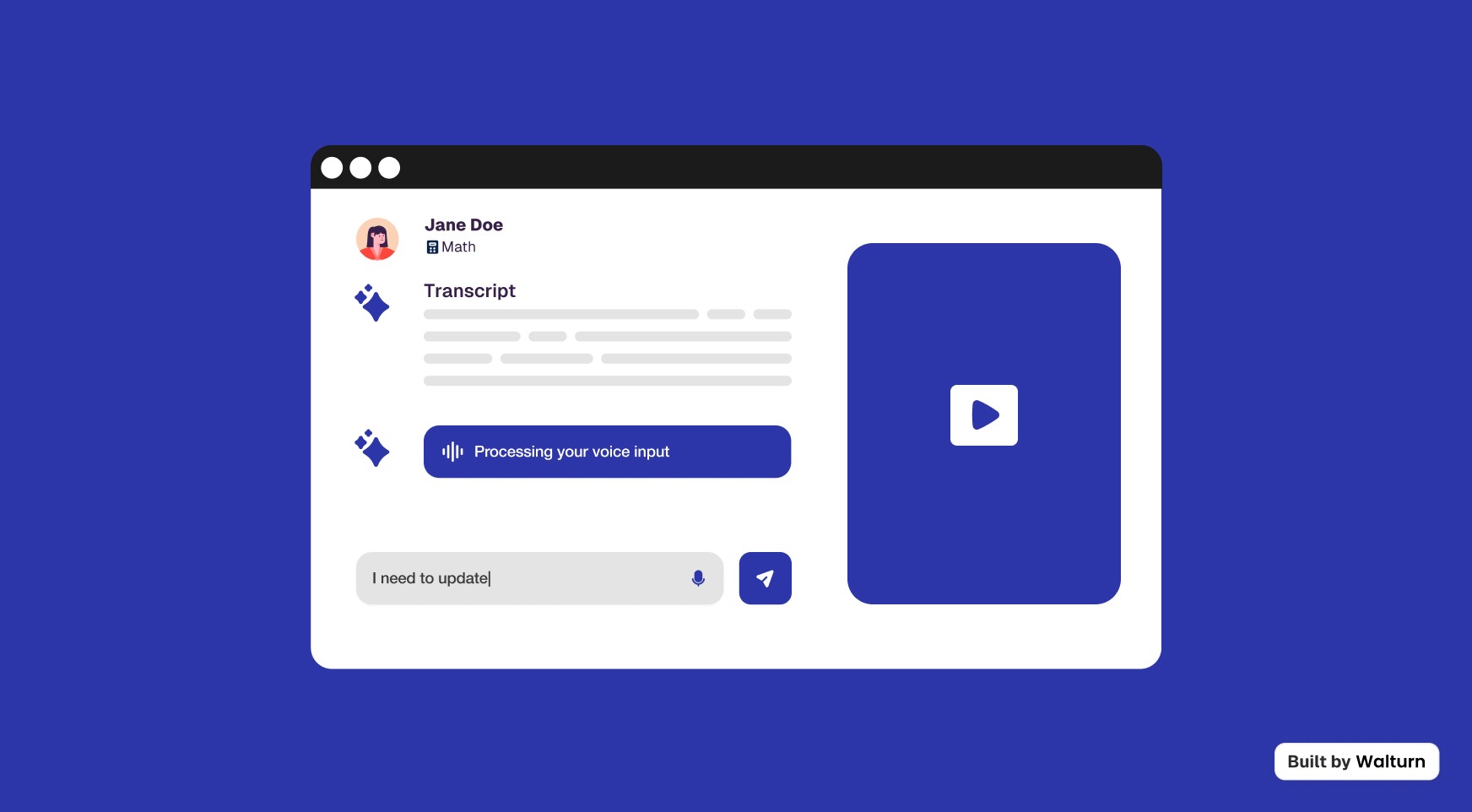

3. Train Your Models to Communicate Appropriately

AI chatbots and tutors can provide highly personalized learning experiences, which have been shown to improve student outcomes. One prime example is Carnegie Learning’s LiveHint, a tutoring solution built on 25 years of research and the experiences of 5.5 million students who tackled over 1.2 billion math problems. It won the “AI Innovation Award” in the 2024 EdTech Breakthrough Awards. Khanmigo, Khan Academy’s AI tutor, is another critically acclaimed chatbot that empowers learners.

While AI chatbots hold much promise for personalizing learning and helping students achieve more, their development requires careful consideration. One key concern is the data a model is trained on: poor-quality data can undermine its validity and expose learners to inappropriate and unsafe language. Beyond being mindful of tone and language, it is also important to consider accessibility and avoid ableist or otherwise discriminatory language. Choosing an age- and development-appropriate training dataset is therefore critical, and doing so is not trivial even for experienced players in the education market. Whenever possible, we advise partnering with reputable research teams or integrating an existing age- and ability-appropriate large language model (LLM) to ensure high quality and safety in your AI-enabled tutoring tools.

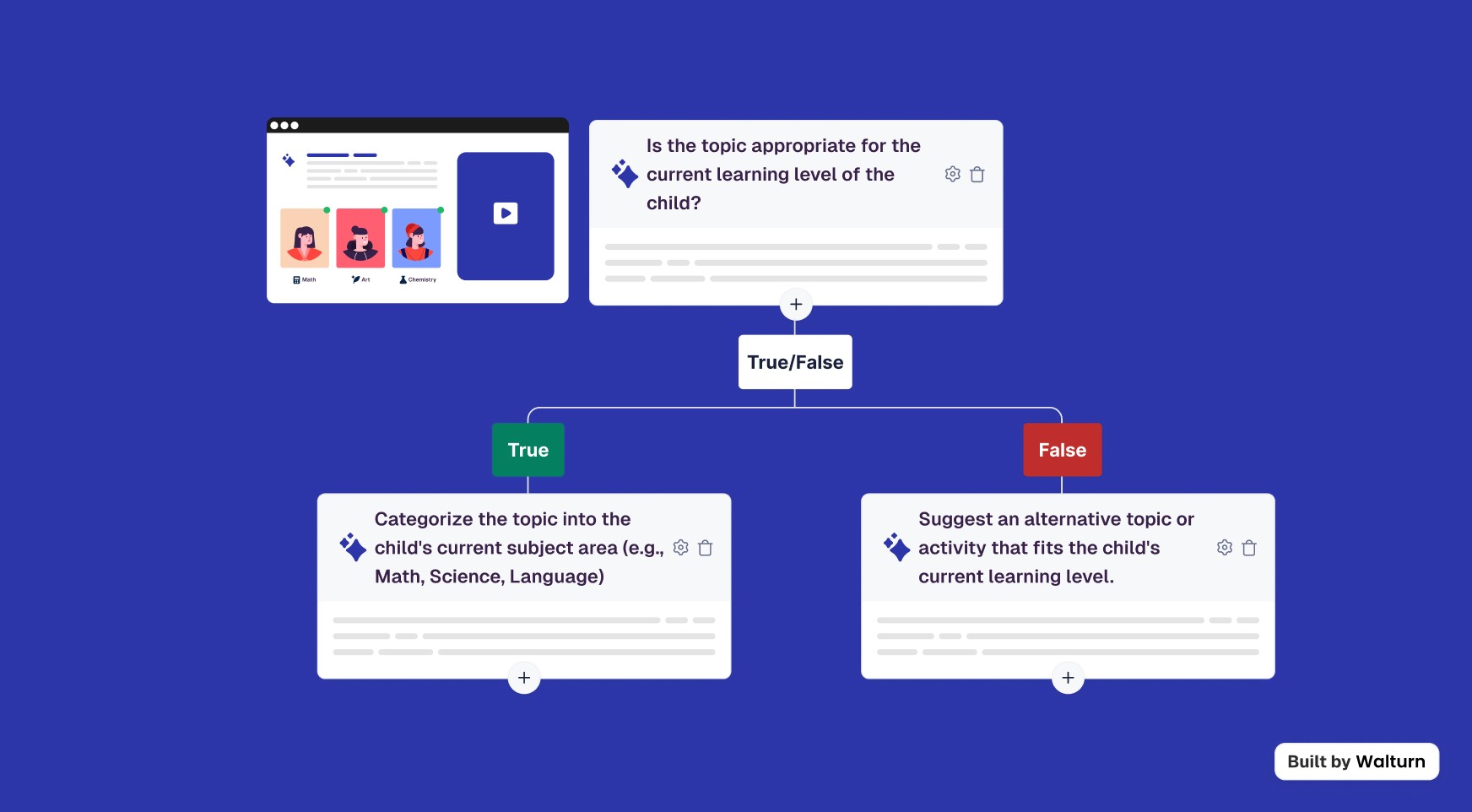

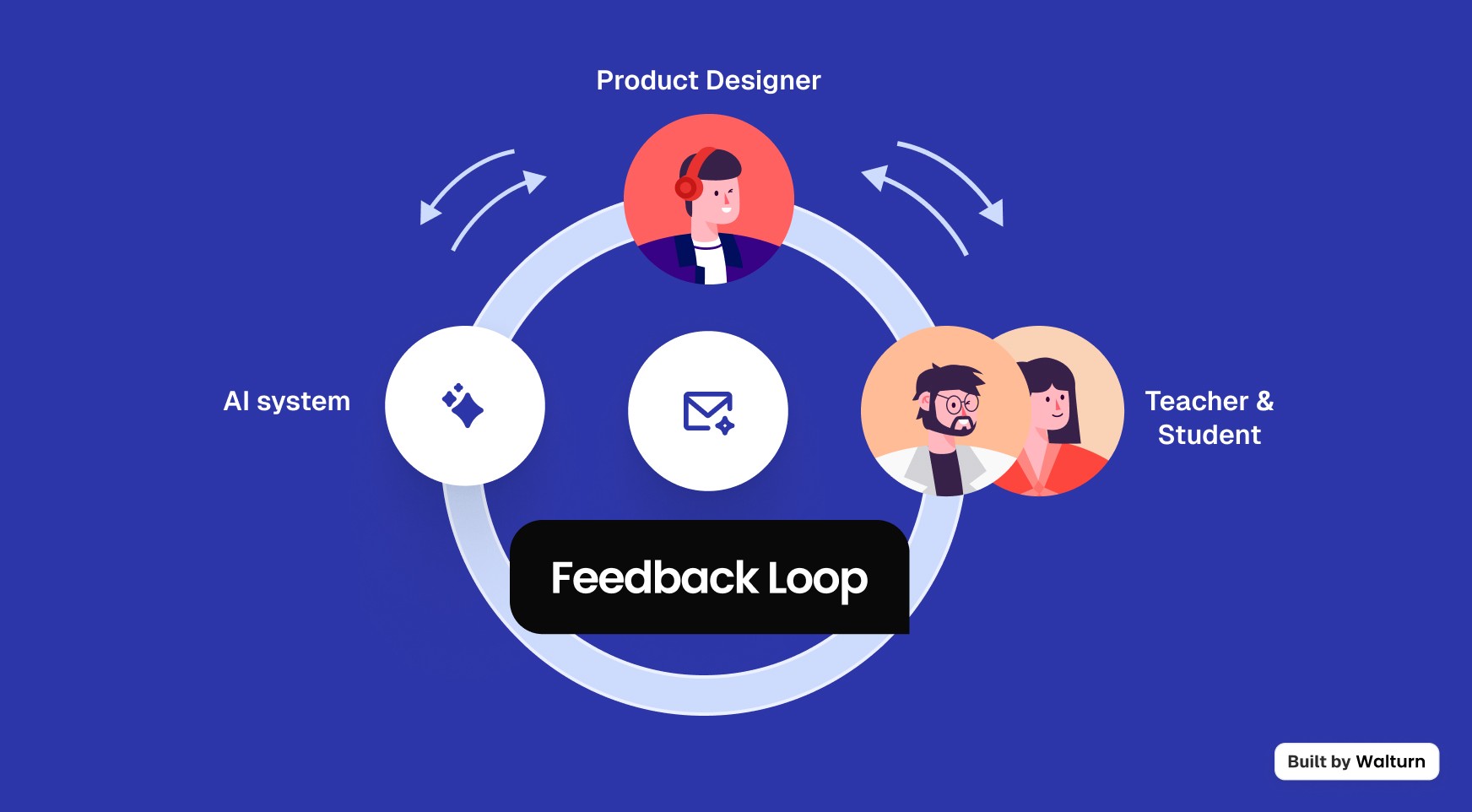

4. Build Feedback Loops for Continuous Improvement

We are still in the early stages of implementing AI-enabled solutions in education, so continuous feedback and iteration are essential for refining new tools and ensuring safe, fair, and impactful learning experiences for all users. Building robust feedback loops into your product will help you improve your AI tool much faster, which can attract more users and, in turn, drive further improvement. Beyond automated feedback, the Bill & Melinda Gates Foundation asserts that involving end-users more directly in the design process is critical for realizing AI’s potential gains equitably.

User feedback has become increasingly important in product development and will be an indispensable tool for creating impactful, equitable learning experiences with AI. We encourage you to build automatic and minimally invasive feedback loops into your products and to think carefully about representation when including end-users in the design process. Where possible, seek feedback from learners and users from diverse backgrounds, as well as from leading experts in your field. Make sure that a commitment to doing the right thing, and frequent iteration to improve equity and safety, are core to the way you operate.

5. Design for Inclusivity and Accessibility

AI solutions must account for the diverse needs of learners. UNESCO has developed a comprehensive set of guidelines to help governments and organizations meet the needs of the approximately 1 billion people worldwide who live with one or more disabilities. Many countries have also become more ethnically diverse, making ethnic and cultural inclusion increasingly relevant worldwide. The World Economic Forum points out that much of the data currently used to develop AI models may contain historical biases and distortions, which can exacerbate existing inequalities in access to high-quality learning.

Growing awareness and acceptance of different backgrounds, abilities, and sexual orientations has added a new layer to the work of product designers. While it can be daunting to learn all the relevant accessibility and inclusivity guidelines and to cater to the needs of so many different people, the intention to do so is critical if we want to work towards a more equitable world, as we hope all edtech entrepreneurs and educators do. That said, it is OK to start small and gradually make your solution more inclusive. Just be aware of your product’s shortcomings and educate yourself on why inclusion matters so much in AI-enabled solutions.

Conclusion

The future of AI in education is filled with promise, but as we’ve discussed, it also carries significant responsibility. Ensuring that AI solutions are both impactful and safe requires a thoughtful, ethical approach to their design and implementation. From prioritizing data privacy and ethical development to ensuring transparency, inclusivity, and the use of high-quality training data, every step must be carefully considered. AI’s potential to revolutionize education will only be realized if we continuously iterate based on feedback, involve diverse users in the design process, and remain committed to equity.

By adopting these five practical tips, with their emphasis on trust, safety, and inclusivity, you can develop AI tools that not only meet today’s needs but also stand the test of time. In a landscape where the pressure to innovate quickly is strong, taking the time to get it right will help ensure that your AI solutions deliver meaningful, lasting impact for learners worldwide.

References

Berendt, Bettina, et al. “AI in Education: Learner Choice and Fundamental Rights.” Learning, Media and Technology, vol. 45, no. 3, 2 July 2020, pp. 312–324, https://doi.org/10.1080/17439884.2020.1786399.

Bloss, Eden. “Carnegie Learning Announces LiveHint AI™.” Businesswire.com, 30 Nov. 2023, www.businesswire.com/news/home/20231130974040/en/. Accessed 20 Oct. 2024.

---. “Carnegie Learning’s LiveHint AI™ Wins ‘AI Innovation Award’ in 2024 EdTech Breakthrough Awards Program.” Businesswire.com, 6 June 2024, www.businesswire.com/news/home/20240606258349/en. Accessed 20 Oct. 2024.

Chambers, Dianne, and Zeynep Varoglu. “Revised Guidelines on the Inclusion of Learners with Disabilities in Open and Distance Learning (ODL).” Unesco.org, 12 Dec. 2023, www.unesco.org/en/articles/revised-guidelines-inclusion-learners-disabilities-open-and-distance-learning-odl. Accessed 24 Oct. 2024.

Chen, Claire. “AI Will Transform Teaching and Learning. Let’s Get It Right.” Stanford HAI, Stanford University, 9 Mar. 2023, hai.stanford.edu/news/ai-will-transform-teaching-and-learning-lets-get-it-right.

“Daniel Schwartz’s Profile | Stanford Profiles.” Profiles.stanford.edu, profiles.stanford.edu/daniel-schwartz. Accessed 20 Oct. 2024.

Díaz-Rodríguez, Natalia, et al. “Connecting the Dots in Trustworthy Artificial Intelligence: From AI Principles, Ethics, and Key Requirements to Responsible AI Systems and Regulation.” Information Fusion, vol. 99, 1 Nov. 2023, p. 101896, www.sciencedirect.com/science/article/pii/S1566253523002129.

“Ethical Considerations in Educational AI | European School Education Platform.” School-Education.ec.europa.eu, 25 Apr. 2024, school-education.ec.europa.eu/en/discover/news/ethical-considerations-educational-ai. Accessed 24 Oct. 2024.

Hagiu, Andrei, and Julian Wright. “To Get Better Customer Data, Build Feedback Loops into Your Products.” Harvard Business Review, 11 July 2023, hbr.org/2023/07/to-get-better-customer-data-build-feedback-loops-into-your-products.

IBM. “What Is Explainable AI? | IBM.” IBM, 2024, www.ibm.com/topics/explainable-ai.

Khosravi, Hassan, et al. “Explainable Artificial Intelligence in Education.” Computers and Education: Artificial Intelligence, vol. 3, no. 3, 2022, p. 100074, https://doi.org/10.1016/j.caeai.2022.100074.

Kooli, Chokri. “Chatbots in Education and Research: A Critical Examination of Ethical Implications and Solutions.” Sustainability, vol. 15, no. 7, 23 Mar. 2023, p. 5614, https://doi.org/10.3390/su15075614.

“Meet Khanmigo, Khan Academy’s AI-Powered Teaching Assistant & Tutor.” Khanmigo.ai, www.khanmigo.ai.

Mehrabi, Ninareh, et al. “A Survey on Bias and Fairness in Machine Learning.” ACM Computing Surveys, vol. 54, no. 6, July 2021, pp. 1–35, https://doi.org/10.1145/3457607.

Nazaretsky, Tanya, et al. “Teachers’ Trust in AI-Powered Educational Technology and a Professional Development Program to Improve It.” British Journal of Educational Technology, vol. 53, no. 4, 9 May 2022, https://doi.org/10.1111/bjet.13232.

Ponomarov, Kostiantyn. “Global AI Regulations Tracker: Europe, Americas & Asia-Pacific Overview.” Legalnodes.com, 7 May 2024, legalnodes.com/article/global-ai-regulations-tracker.

Poushter, Jacob, and Janell Fetterolf. “How People around the World View Diversity in Their Countries.” Pew Research Center’s Global Attitudes Project, 22 Apr. 2019, www.pewresearch.org/global/2019/04/22/how-people-around-the-world-view-diversity-in-their-countries/.

Suzman, Mark. “The First Principles Guiding Our Work with AI.” Bill & Melinda Gates Foundation, 21 May 2023, www.gatesfoundation.org/ideas/articles/artificial-intelligence-ai-development-principles.

Welker, Yonah. “Generative AI Holds Great Potential for Those with Disabilities - but It Needs Policy to Shape It.” World Economic Forum, 3 Nov. 2023, www.weforum.org/agenda/2023/11/generative-ai-holds-potential-disabilities/.

Wu, Rong, and Zhonggen Yu. “Do AI Chatbots Improve Student’s Learning Outcomes? Evidence from a Meta-Analysis.” British Journal of Educational Technology, vol. 55, no. 1, 3 May 2023, https://doi.org/10.1111/bjet.13334.

Zewe, Adam. “Unpacking Black-Box Models.” MIT News | Massachusetts Institute of Technology, 5 May 2022, news.mit.edu/2022/machine-learning-explainability-0505.